ATI has finally released their first family of Shader Model 3.0 supporting chips. With a new and updated architecture, new features and even more performance, ATI has closed the gap to NVIDIA and in many cases passed them again. We’ve not only listened to tons of presentations about the X1000 but also done some extensive benchmarking to give you the low-down on the new cards.

Introduction

How far we have come. In the 8 years I’ve been running this site 3D acceleration has evolved a lot, and it is easy to get a bit jaded when you see a new generation of cards. This time it is a bit more interesting since ATI is introducing their first family of SM3.0 supporting chips as well as trying to retake the performance crown from NVIDIA.

I’ve spent 2 days in Ibiza, Spain, partying, listening to ATI presentations and, much more importantly, testing actual X1000 products, and now it is time to give you an idea of what you can expect from ATI’s new line of products coming this autumn.

The X1000 family

ATI today presents not a single card but a whole family of cards. Basically they are introducing the new architecture all the way from the low end (X1300 series) through the midrange (X1600 series) to the high end (X1800 series).

The common ground

Before we look at each specific chip, let us look at what all the cards have in common:

· All chips are built with a 90nm process technology

· All chips have full SM3.0 support

· All cards have AVIVO support

With the X1000 series ATI has decided to focus a lot on the shader performance. They have de-coupled the components of the rendering pipeline to make the GPU design much more flexible.

Let’s start with the low-end, the X1300 series.

X1300

Being the low-end solution, this chip probably doesn’t interest most hardcore gamers, but it is finally time for ATI to bring full SM3.0 support even to their low-end cards. The card of course also has the other architectural improvements that we will discuss a bit later in this article.

· 4 pixel shader processors (1 quad)

· 2 vertex shader processors

· 4 texture units

· 4 render back-ends

· 4 Z-compare units

· 128 Max threads

Radeon X1300 PRO

· 600 MHz engine

· 800 MHz memory

· 256 MB

· Recommended price: $149

Radeon X1300

· 450 MHz engine

· 500 MHz memory

· 128 MB/256 MB

· Recommended price: $99/$129

Radeon X1300 HyperMemory

· 450 MHz engine

· 1 GHz memory

· 32 MB/128 MB HyperMemory

· Recommended price: $79

One interesting thing about the X1300 is that it is the first chip that is CrossFire capable without a special chip. This means you can hook up two X1300 cards right away without having to buy a special CrossFire Edition X1300.

The X1300 will be available from the 10th of October.

X1600

· 12 pixel shader processors (3 quads)

· 5 vertex shader processors

· 4 texture units

· 4 render back-ends

· 8 Z-compare units

· 128 Max threads

X1600 XT

· 590 MHz engine

· 1.38 GHz memory

· 128 MB/256 MB

· Recommended price: $199/$249

X1600 PRO

· 500 MHz engine

· 780 MHz memory

· 128 MB/256 MB

· Recommended price: $149/$199

This chip is probably one of the more interesting ones for people who want a lot of performance without the high price.

The X1600 will be available at the end of November.

X1800

· 16 pixel shader processors (4 quads)

· 8 vertex shader processors

· 16 texture units

· 16 render back-ends

· 16 Z-compare units

· 512 Max threads

X1800XT

· 625 MHz engine

· 1.5 GHz memory

· 256 MB/512 MB GDDR3

· Recommended price: $499/$549

X1800XL

· 500 MHz engine

· 1.0 GHz memory

· 256 MB GDDR3

· Recommended price: $449

The X1800 chips help ATI once again beat NVIDIA and become the king of the hill (although I am quite certain we’ll hear about NVIDIA’s response quite soon).

The X1800XL will be available from the 10th of October and then the X1800XT from the 1st of October.

Shader Model 3.0 support at last

While NVIDIA included SM3.0 support in the GeForce6 chips, ATI decided to stay at SM2.0 support, albeit with some added features. Their reasoning was that it simply wasn’t worth it at that time (X800 preview, https://bjorn3d.com/read.php?cID=457). In hindsight they were correct: the added PS3.0 support hasn’t exactly given the GeForce6 series any big advantage, and it is only now that the gaming scene is starting to move to more PS3.0 support. On the other hand, more and more games now add support for SM3.0, so we still don’t know how well the X8xx series will run those games compared to how it would have run them with SM3.0 support. I still applaud NVIDIA for taking the first step since we need companies to move forward, and now that ATI introduces SM3.0 support in all their cards we are finally moving towards a future where every card has SM3.0 support.

Of course ATI cannot just claim to add SM3.0 support; that would make it too easy for NVIDIA to say ATI is just following them. ATI instead claims to have “SM3.0 done right”. I leave you to see through all the PR boasting and judge how good ATI’s SM3.0 support really is.

The key features in the SM3.0 support are:

· Dynamic Flow Control – branching (IF…ELSE), looping and subroutines

· 128-bit Floating Point Processing for both pixel and vertex shaders

· Longer shaders – billions of instructions possible with flow control

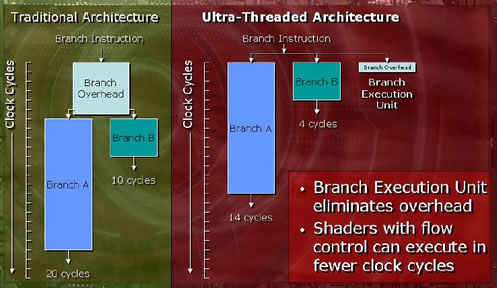

Dynamic Flow Control

This allows different paths through the same shader to be executed on adjacent pixels. It can provide significant optimization opportunities. It can, however, interfere with parallelism, creating redundant computation that can cancel out any benefits of the flow control.

To get the best flow control ATI has added something they call Ultra-threading. The benefits of Ultra-Threading are that it hides texture fetch latency and minimizes shader idle time and wasted cycles.

Ultra-Threading means:

· Large Scale Multi-threading

– Hundreds of simultaneous threads across multiple cores.

– Each thread can perform up to 6 different shader instructions on 4 pixels per clock cycle

· Small Thread sizes

– 16 pixels per thread in Radeon X1800

– Fine-grain parallelism

· Fast Branch Execution

– Dedicated units handle flow control with no ALU overhead

· Large Multi-Ported Register arrays

– enables fast thread switching
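
To get a feel for why small thread sizes matter for branching, here is a minimal Python sketch. It assumes a heavily simplified cost model of my own (not ATI’s actual scheduler): all pixels in a thread run in lockstep, so a thread whose pixels disagree on a branch pays for both paths. The function name, the costs and the pixel pattern are made up for illustration; only the 16-pixel thread size comes from the list above.

```python
def shading_cost(takes_branch, thread_size, cheap_cost=1, expensive_cost=10):
    """Total cost of shading pixels that are grouped into lockstep threads.

    Simplified model (an assumption, not real hardware behaviour): a thread
    whose pixels all agree pays for one path, a mixed thread pays for both.
    """
    total = 0
    for start in range(0, len(takes_branch), thread_size):
        group = takes_branch[start:start + thread_size]
        cost_per_pixel = 0
        if any(group):        # at least one pixel needs the expensive path
            cost_per_pixel += expensive_cost
        if not all(group):    # at least one pixel needs the cheap path
            cost_per_pixel += cheap_cost
        total += cost_per_pixel * len(group)
    return total


# Hypothetical scanline: 1024 pixels, only a run of 16 needs the expensive path.
pixels = [False] * 1024
pixels[512:528] = [True] * 16

small = shading_cost(pixels, thread_size=16)     # X1800-style 16-pixel threads
large = shading_cost(pixels, thread_size=1024)   # one huge thread for comparison
print(small, large)
```

With these made-up numbers the 16-pixel threads cost 1168 units, while a single 1024-pixel thread costs 11264, because every pixel in it ends up paying for both paths.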

ATI has tried to explain the advantages of these points through some examples. I’ll let you read and create your own opinion.

128-bit Floating Point Processing

The X1000 series now includes 128-bit floating point precision. The advantages of this are:

· Optimal performance without reducing precision

– General Purpose Register array has ample storage and read/write bandwidth

– All shader calculations use 128-bit floating point precision, at full speed

· Effective handling of non-pixel operations

Examples:

– Vertex processing – render to vertex buffer

– Parallel data processing – off-loading work from CPU

· Maintains precision in long shaders

The new memory controller

The next improvement in the X1000 series is the memory controller. There are a few key features in the new controller:

· Support for today’s fastest graphics memory devices as well as being software upgradeable to support the future.

· 512-bit Ring Bus – this simplifies the layout and enables extreme memory clock scaling

· New cache design – Fully associative for more optimal performance

· Improved Hyper-Z – better compression and hidden surface removal

· Programmable arbitration logic – Maximizes memory efficiency and can be upgraded via software

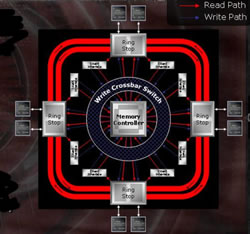

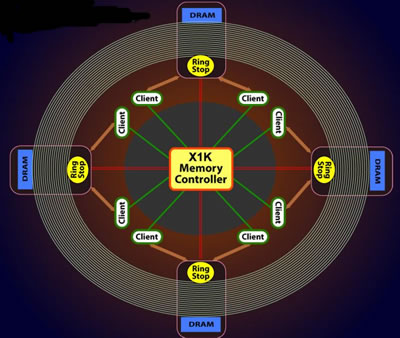

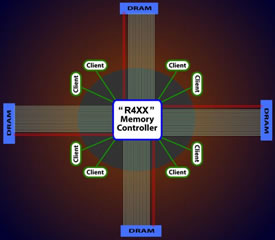

The Ring Bus

Before we start looking at what this is, just take a look at the pictures. Isn’t it a cool design/layout?

The Ring Bus is built from two internal 256-bit rings that run in opposite directions to minimize latency. The rings return the requested data to the client, while memory writes use the crossbar. The whole layout minimizes routing complexity since the wiring runs around the chip, which in theory permits higher clock speeds. Below are two pictures comparing the memory controller of the R4xx chips and the new Ring Bus controller.
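
The latency argument for two counter-rotating rings can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the number of ring stops (4 here) and the idea of counting “hops” between stops are not taken from ATI’s material.

```python
def one_way_hops(src, dst, stops):
    """Hops needed when data can only travel in one direction around the ring."""
    return (dst - src) % stops


def two_way_hops(src, dst, stops):
    """Hops needed when a second ring runs the opposite way: take the shorter one."""
    clockwise = (dst - src) % stops
    counter_clockwise = (src - dst) % stops
    return min(clockwise, counter_clockwise)


STOPS = 4  # assumed number of ring stops, for illustration only

worst_one_ring = max(one_way_hops(0, d, STOPS) for d in range(STOPS))
worst_two_rings = max(two_way_hops(0, d, STOPS) for d in range(STOPS))
print(worst_one_ring, worst_two_rings)
```

In this toy model the worst case drops from 3 hops with a single ring to 2 hops with two rings running in opposite directions.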

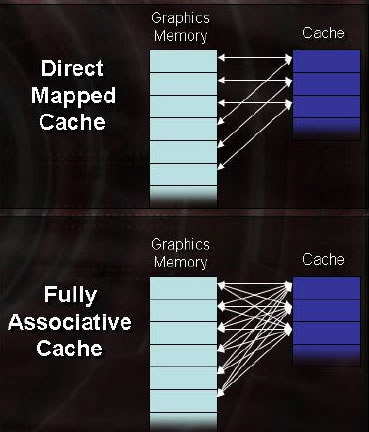

New cache design

The new cache design helps reduce memory bandwidth requirements as well as minimizes cache contention stalls. ATI claims up to 25% gains clock for clock in fill/bandwidth bound cases.

Improved Hyper-Z

The hierarchical Z buffer has been improved and catches up to 60% more hidden pixels than the X850. This is done with new techniques that use floating point for improved precision.
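
For those who haven’t seen hierarchical Z before, here is a minimal Python sketch of the general idea. It is not ATI’s Hyper-Z implementation: the 1D depth buffer, the tile size and the “smaller depth is closer” convention are assumptions made for the example.

```python
def tile_max_depths(depth_buffer, tile_size):
    """Coarse buffer storing the farthest depth already drawn in each tile."""
    return [max(depth_buffer[i:i + tile_size])
            for i in range(0, len(depth_buffer), tile_size)]


def can_reject(tile_max, incoming_min_depth):
    """True if everything incoming is behind what the tile already contains,
    so the whole tile can be skipped without testing individual pixels."""
    return incoming_min_depth > tile_max


# Made-up depth values: the first tile holds near geometry, the second far geometry.
depth_buffer = [0.2, 0.3, 0.25, 0.3, 0.9, 0.8, 1.0, 0.7]
coarse = tile_max_depths(depth_buffer, tile_size=4)

# A new surface whose closest point is at depth 0.5:
rejected = [can_reject(t, 0.5) for t in coarse]
print(coarse, rejected)
```

The first tile is rejected in one comparison because the new surface lies entirely behind it, while the second tile still needs a per-pixel depth test.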

The Z-compression has also been improved, providing higher compression ratios.

All these new features in the memory controller show the most benefit in the most bandwidth-demanding situations (high resolutions of 1600×1200 and higher, anti-aliasing, anisotropic filtering as well as HDR).

Image quality

New technology under the hood isn’t worth anything if the output is worsened. ATI of course claims that they not only keep the same high image quality they are known for but even have some new tricks up their sleeve. From what I’ve seen they are quite right.

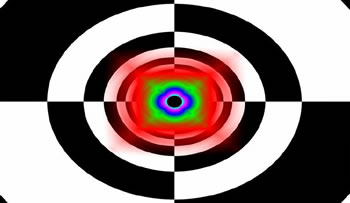

HDR with anti-aliasing

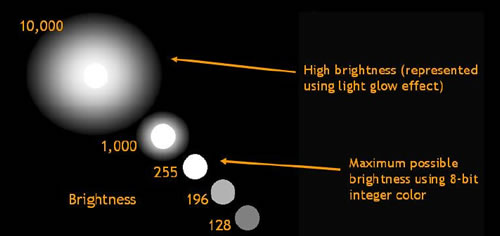

Lately there has been a lot of talk about HDR. HDR stands for High Dynamic Range and describes the ratio between the highest and lowest value that can be represented. Most displays can only show values between 0 and 255 (i.e. 8 bits per colour component). Below is an idea of how you can use shaders to create an illusion of higher brightness and take advantage of more than 8 bits per colour component.
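
To make the 8-bit limitation concrete, here is a small Python sketch. It compares simply clipping bright values with compressing them through the Reinhard operator x / (1 + x), a common tone mapping curve that I picked for the example; I am not claiming this is what ATI or Far Cry actually uses. The scene values are made up.

```python
def clamp_to_8bit(value):
    """Naive path: clip scene brightness to [0, 1] and quantize to 0..255."""
    return round(min(max(value, 0.0), 1.0) * 255)


def tone_map_to_8bit(value):
    """Reinhard operator x / (1 + x): squeezes any brightness into [0, 1)."""
    mapped = value / (1.0 + value)
    return round(mapped * 255)


# Hypothetical scene brightness values, where 1.0 is "display white".
scene = [0.5, 1.0, 4.0, 16.0]

clamped = [clamp_to_8bit(v) for v in scene]
tone_mapped = [tone_map_to_8bit(v) for v in scene]
print(clamped)
print(tone_mapped)
```

With clamping, everything at or above 1.0 ends up as the same white, so the difference between a lamp and the sun is lost. With the tone mapping curve the brighter values stay distinguishable, which is only possible if the intermediate buffers can hold values above 1.0 in the first place.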

We know NVIDIA supports HDR so what is the big deal you might ask? Well, ATI supports HDR together with anti-aliasing. So far they are alone in doing this. It means that you don’t have to choose between more realistic colours and an anti-aliased image. ATI showed us some examples from Far Cry (using an unofficial patch that supported the X1000 series) and the difference was very noticeable. Not only did the HDR version look better, but when they turned on AA you also got rid of all the ‘crawling ants’ on trees that were swaying in the wind.

The X1000 series supports a wide range of HDR formats for flexibility:

· 64-bit (FP16, Int16) – maximum range

· 32-bit (Int10) – maximum speed

· Custom formats for optimal performance.

Below is a comparison in Far Cry between an image without HDR/FSAA and with HDR/FSAA.

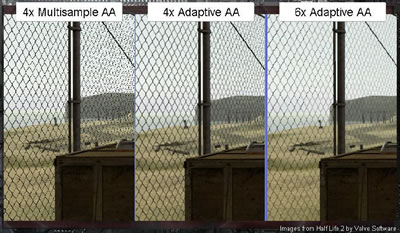

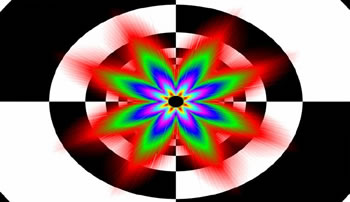

Adaptive Anti-Aliasing

The X8xx series introduced temporal AA and the X1000 series now introduces adaptive anti-aliasing.

Adaptive anti-aliasing:

· Combines image quality of supersampling with speed of multisampling

· Most visible improvements on partly transparent surfaces (foliage, fences, grates etc.)

The problem as explained by ATI is this: some objects in games might look like they are made up of lots of smaller polygons although they are in fact made up of one large, partly transparent texture. See the image below for an example. Since the fence in the example is made up of a texture, it isn’t anti-aliased by traditional multisample AA. With adaptive anti-aliasing the fence is suddenly anti-aliased just like the rest of the image.
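
The fence problem can be shown with a tiny 1D model in Python. This is my own simplified sketch, not ATI’s algorithm: the `alpha` function stands in for a hypothetical fence texture, and I assume multisampling runs the transparency test once per pixel while supersampling runs it once per sample.

```python
def alpha(x):
    """Hypothetical fence texture: opaque left of x = 2.3, transparent right of it."""
    return 1.0 if x < 2.3 else 0.0


def multisample_coverage(pixel, samples=4):
    """Transparency test runs once at the pixel centre; all samples share it."""
    return alpha(pixel + 0.5)


def supersample_coverage(pixel, samples=4):
    """Transparency test runs at every sample position inside the pixel."""
    positions = [pixel + (i + 0.5) / samples for i in range(samples)]
    return sum(alpha(p) for p in positions) / samples


def adaptive_coverage(pixel, alpha_tested, samples=4):
    """Pay for supersampling only where the surface is partly transparent."""
    if alpha_tested:
        return supersample_coverage(pixel, samples)
    return multisample_coverage(pixel, samples)


# Pixel 2 contains the edge of the fence texture.
print(multisample_coverage(2), adaptive_coverage(2, alpha_tested=True))
```

For the pixel that contains the texture edge, the multisample path returns 0.0 (a hard, aliased edge) while the adaptive path returns 0.25, a blended value that smooths the edge.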

All the AA modes of course work with HDR. I tried the new adaptive AA in the Call of Duty 2 demo and it worked really well. When you drive into the little town there are some fences and a door with metal bars in it behind the truck where you disembark. Even with 6xAA and 16x AF these look pretty bad, with some aliasing. With the new AA mode they look a lot better.

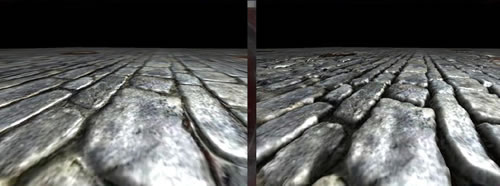

Improved Texture Filtering

ATI has introduced a new anisotropic filtering mode with the X1000 series: Area aniso. They have also increased the trilinear filtering precision, giving sharper, clearer textures.

Normal 16x Anisotropic Filtering on the X1800XT

High quality 16x Area-Anisotropic filtering

An image from 3Dmark05’s anisotropic filtering test probably shows best how the new mode works. We will later test how much of a performance hit you can expect with this mode.

Parallax Occlusion Mapping

I just had to talk a bit about this since I think it is a pretty cool new effect that ATI can now use. It is called Parallax Occlusion Mapping and I won’t go into the theory behind it. Put simply, it manages to create the impression of depth in a texture that holds up even when you look at it from different angles.

· Per-pixel ray tracing at its core

· Correctly handles complicated viewing phenomena and surface details

– displays motion parallax

– renders complex geometric surfaces such as displaced text/sharp objects

– uses occlusion mapping to determine visibility for surface features (resulting in correct self-shadowing)

– uses flexible lighting model

There’s of course a lot more to it, and ATI for instance uses the dynamic flow control in the pixel shader to dynamically adjust the sample rate per pixel.
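
For readers who want a rough feel for the idea anyway, here is a Python sketch of the two ingredients mentioned: marching a ray through a height map, and picking the number of samples per pixel from the viewing angle. It is a simplified 1D illustration under my own assumptions (function names, sample limits and the flat test height map are all made up), not ATI’s shader.

```python
def sample_count(view_z, min_samples=8, max_samples=64):
    """More samples at grazing angles, fewer when looking straight at the surface.

    view_z is the component of the normalized view vector along the surface
    normal: 1.0 means looking straight down, 0.0 means a grazing angle.
    """
    t = 1.0 - abs(view_z)
    return round(min_samples + t * (max_samples - min_samples))


def find_intersection(height_at, start_u, du_total, steps):
    """Step a ray from the top of the height volume (1.0) down to the bottom (0.0)
    and return the texture coordinate where it first goes below the height map."""
    for i in range(steps + 1):
        frac = i / steps
        u = start_u + du_total * frac
        ray_height = 1.0 - frac
        if ray_height <= height_at(u):
            return u
    return start_u + du_total


def flat_height(u):
    """Hypothetical height map that is 0.5 everywhere."""
    return 0.5


steps = sample_count(view_z=0.5)
hit_u = find_intersection(flat_height, start_u=0.0, du_total=1.0, steps=4)
print(steps, hit_u)
```

The loop with an early exit is exactly the kind of code that benefits from dynamic flow control: pixels seen head-on can stop after a handful of samples while pixels at grazing angles take many more.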

The end result can be seen here:

The future of Catalyst

The new Catalyst will of course support all the new features of the X1000 series including the new anti-aliasing modes and the new anisotropic filtering mode. CrossFire support will be included, although ATI still seems to be against letting the user select the kind of CrossFire support a game should use.

All the new cards will have Overdrive support, which means you will be able to overclock directly from the CCC. A frequently requested feature has also been added that lets you throttle down the clock setting when doing 2D work.

In the near future we will also see new drivers that support multi-core CPUs and can take advantage of the “second CPU”:

· Off-load vertex processing

· Off-load shader compiler operations

· True multi-tasking – TV/PVR/DVD on one display while running 3D or office apps on another

Since the X1000 series supports H.264 decoding, Catalyst will of course include support for that. A decoder should be available shortly after launch.

And now for something completely different – the GPU as a CPU

I really have to write a bit about this since to me this was a very interesting presentation made by Mike Houston from Stanford University. Basically he talked about the work that is being done on using the GPU for general-purpose computations.

I wish I could write an interesting piece on this, but it was all pretty complicated and yet very interesting. I suggest you head over to http://graphics.stanford.edu/~mhouston and read more about it.

The conclusion: the X1800 really beats the GeForce 7800GTX in most cases. Still, Mike did hope that ATI and NVIDIA would start thinking about this kind of application when they develop future chips, since there still is much that can be better. But who knows, in the future maybe it will be a Radeon 3800 or a GeForce 9900GTX that assists in finding the cure for cancer.

The benchmarks

I know you have all been waiting for this. Not only did we get a full day to benchmark the X1800XT, I was also lucky enough to be able to bring some of the new cards home with me and continue benchmarking them on my own system (note to self: bring motherboard, CPU and hard drive to the next event). This means the scores below come both from the system at the event and from my system at home. Unfortunately I didn’t have a GeForce 7800GTX at home, so for the comparison between the X1800XT and the GeForce 7800GTX I will use the ATI system.

Due to limited time before this article had to go online I chose to only run benchmarks on the high-end cards. In a few days we will follow up with an article about the performance of the X1300 and the X1600 and compare them to the X800GT, X800GTO and the GeForce 6600GT.

Before I move on to the scores, a big thanks to Anton from Nordic Hardware, Ozkan from PC Labs in Turkey and Thomas from HardwareOnline.dk. Together we hogged a PC during both Saturday and the whole of Sunday and managed to get a whole bunch of scores. Also thanks to Öjvind from sweclockers.com, who lent us a Leadtek GeForce 7800GTX so we could get some comparative scores.

The systems

· Sapphire CrossFire Edition Mainboard

· Athlon64 4000+

· 1 GB Kingston HyperX

· Seagate Barracuda 7200.7 160 GB SATA

· Enermax 600W

· Samsung SH-D162 DVD Drive

· Windows XP SP2

· ForceWare 78.03

· ATI Catalyst 8.173

· 512 MB X1800XT, 256 MB X1800XL and Leadtek GeForce 7800GTX (old bios, not overclocked)

This system was a bit unstable and we also noticed that the timings for the memory were set pretty slow (cas 2, 7-3-3-2). I’m sure with a decent nForce4 motherboard we could have gotten even better scores.

The Bjorn3D system

· Asus nForce 4 Sli Deluxe mainboard

· Athlon X2 4400+

· 1 GB OCZ memory

· Various SATA hard drives (Maxtor, Western Digital)

· Windows XP SP2

· ATI Catalyst 5.9 (for non X1000 cards)

· ATI Catalyst 8.173 beta (for X1000 cards)

· ForceWare 81.81 beta

The cards tested on this system were: 512 MB X1800XT, 256 MB X1800XL, 256 MB X850XT, 512 MB X800XL and 256 MB GeForce 7800GT. A few scores from an XFX GeForce 7800GTX were also included since they were run on this system a few weeks ago. These scores were done with an older ForceWare driver.

Benchmarking isn’t always fun ..

Benchmarks – 3Dmark03 and 3Dmark05

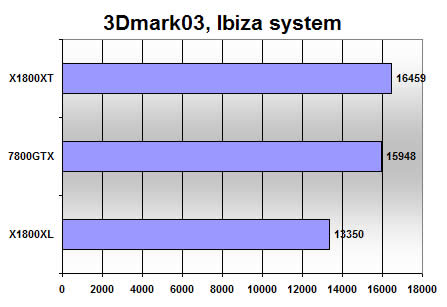

3Dmark03

We included this benchmark since it could be interesting to see how the new cards will handle older games.

How we benched it: We ran the default benchmark and wrote down the scores.

There’s a 3% difference between the GeForce 7800GTX and the X1800XT, and that is almost inside the margin of error. As we could expect, the two top cards have no problems with the benchmark. The X1800XL falls about 19% behind the X1800XT.

3Dmark05

Although we soon can expect a new version with more SM3.0 support this benchmark still is an interesting tool to use to gauge the performance of the different cards.

How we benched it: We ran the default benchmark and wrote down the scores.

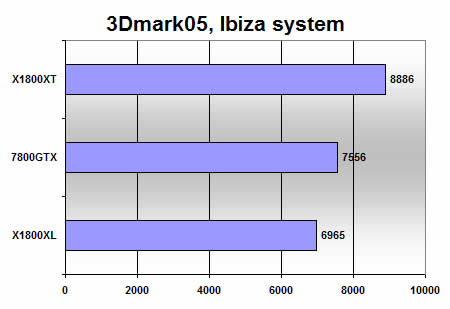

Let us first take a look at the Ibiza system:

The X1800XT scores almost 18% better than the GeForce 7800GTX on this system. The X1800XL is actually not far behind the GeForce 7800GTX and is roughly 22% slower than the X1800XT.

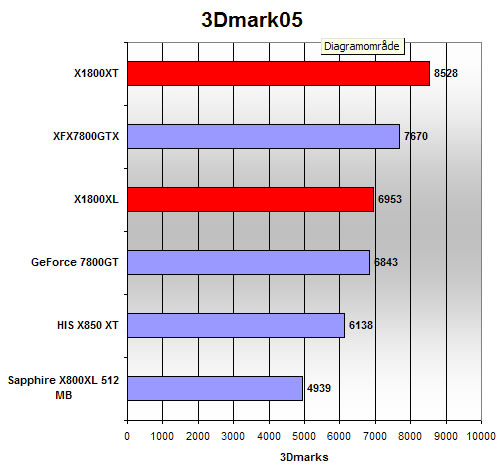

If we switch to our main system the results are similar:

The X1800XT is about 11% faster than the GeForce 7800GTX in this system. The X1800XL and the GeForce 7800GT are almost tied, leaving the X850XT and the 512 MB X800XL behind.

Benchmarks – Doom 3 and Far Cry

Doom 3

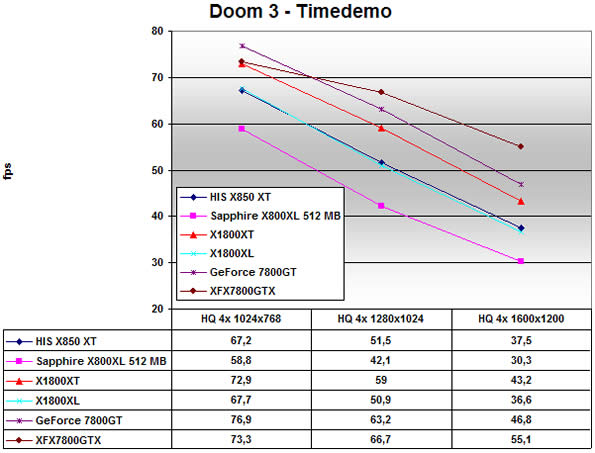

As the only OpenGL game in our test suite, Doom 3 is used to test the speed of the OpenGL implementation, an area where NVIDIA traditionally has been strong.

How we benched it: Two sets of demos were used. Demo1 is the built-in demo and the HOL demo is a demo created by hardwareonline.dk. On the Bjorn3D system we let the filtering be set through Doom 3’s HQ setting. In the HOL demo we forced 16x AF through the drivers. Each run was made several times to remove any slow scores due to caching. Doom 3 was updated with the latest patch.

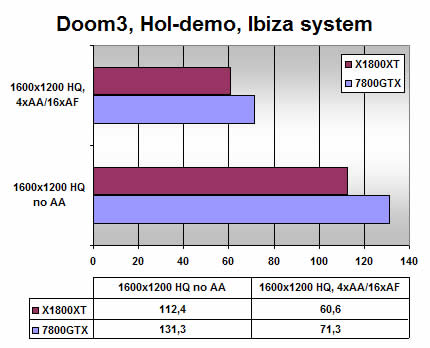

First we look at the Ibiza system:

Ouch! ATI apparently still has a lot of work to do on their OpenGL drivers. The GeForce 7800GTX is 17% faster both with and without AA.

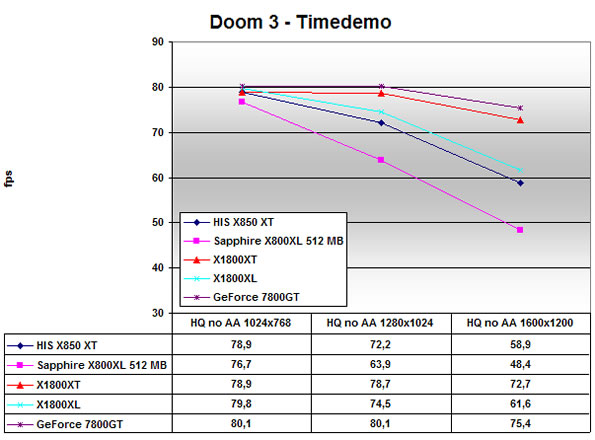

As you can see this is confirmed by the run in our main system. The X1800XT gets beaten even by the GeForce 7800GT.

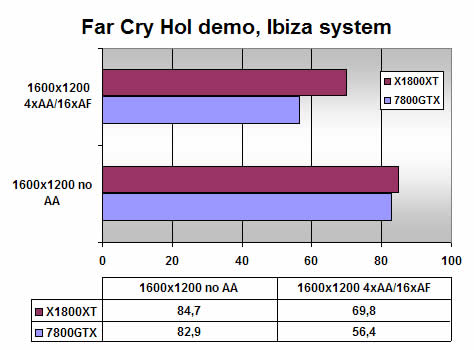

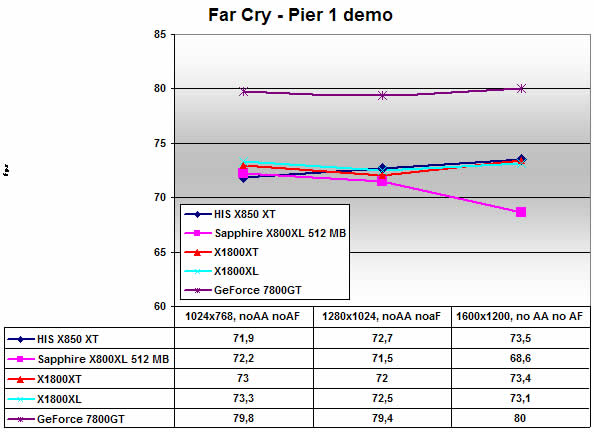

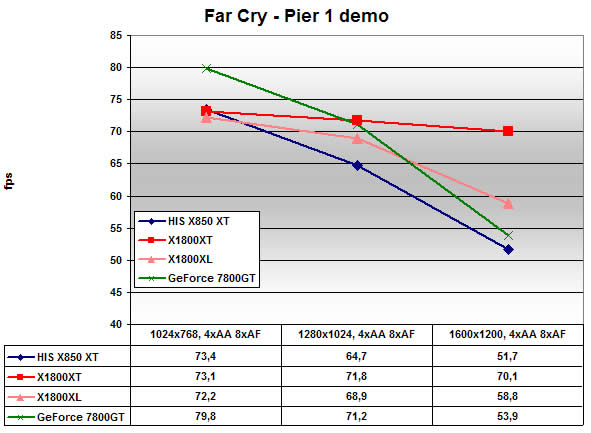

Far Cry

Even though the game is over a year old it still is a stunning looking game. No wonder it is popular to use for benchmarking.

How we benched it: On our main system we used the Pier 1 demo (the one where you fly with a glider). On the Ibiza system we used a demo from hardwareonline.dk. AA and AF were set from the drivers. Everything else was set to max. Patch 1.33 was used. HDR was not used. We used Benchemall to run each test up to 7 times and then averaged the results from the last 3-4 runs.
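
The averaging itself is simple, but for clarity here is a small Python sketch of it. The fps values are made up for illustration and the function name is my own; Benchemall does the actual running.

```python
def average_of_last_runs(fps_runs, keep=3):
    """Average only the last `keep` runs, skipping the early runs that may be
    slowed down by disk access and caching."""
    if not fps_runs:
        raise ValueError("need at least one run")
    last = fps_runs[-keep:]
    return sum(last) / len(last)


# Hypothetical results from 7 consecutive runs of the same demo.
runs = [48.0, 55.0, 60.0, 61.0, 60.0, 62.0, 61.0]
score = average_of_last_runs(runs, keep=4)
print(score)
```

Here the first runs are clearly slower, so averaging all seven would understate what the card does once everything is loaded.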

In the Ibiza system the X1800XT manages to stay ahead both with and without AA/AF. When you turn on AA/AF the X1800XT speeds ahead ending almost 24% faster.

Oddly enough the GeForce 7800GT scores much higher as long as we don’t have any AA/AF turned on. It looks like all cards are relatively CPU-bound without AA and AF (except the X800XL at 1600×1200), so I’m not sure why the GeForce 7800GT manages to score 7 fps better (about 10%). As we turn on AA and AF, though, the normal order is restored, with the X1800XT crushing all the other cards and even the X1800XL beating the GeForce 7800GT. The former high-end ATI card, the X850XT, comes last, almost 20 fps behind the new king, the X1800XT, at 1600×1200.

Benchmarks – Call of Duty 2 demo and Serious Sam 2 demo

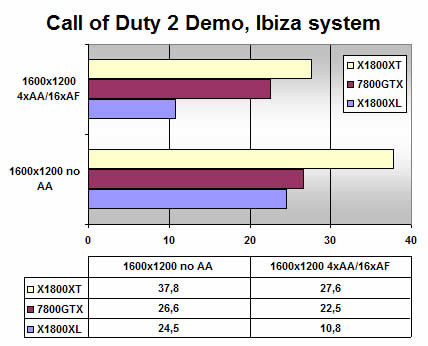

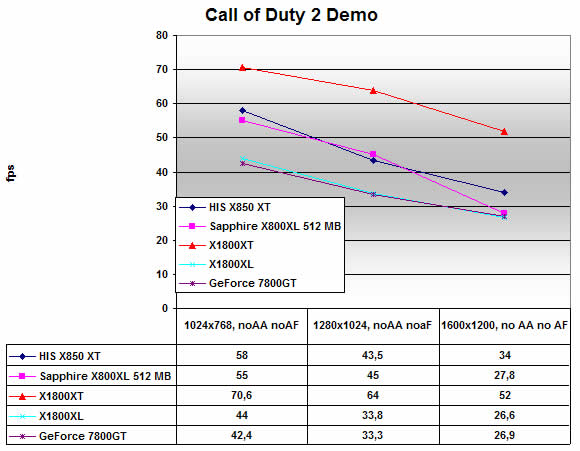

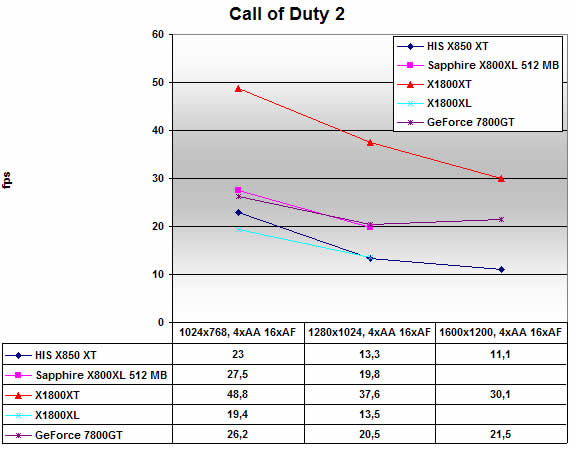

Call of Duty 2 demo

The pre-release demo of Call of Duty 2 was released a few days before the event. It looks just as cool as the original Call of Duty did and we of course had to include it to see what these cards could do with a brand new game.

How we benched it: Since Call of Duty 2 doesn’t have a timedemo feature built in, we used Fraps to record the fps. We played through the game for 90 seconds from the start, trying to move and do the same things every time. This was done several times for each setting to make sure we could get a relatively accurate average, at least as good as you can get when using Fraps. AA and AF were set from the drivers. Everything in the demo was set at its highest possible level.

As you can see there is no competition here. The X1800XT crushes the two other cards. With no AA/AF even the X1800XL can almost keep up with the GeForce 7800GTX, but as we turn on AA/AF it falls behind.

From these scores it is obvious the ATI cards work well in this game. I had some issues with some of the cards (the X1800XL, the X800XL and possibly even the GeForce 7800GT) where the 1600×1200 4xAA/16xAF scores went up from 1280×1024. It looks like the game decides to turn off or turn down either AA or AF for some reason.

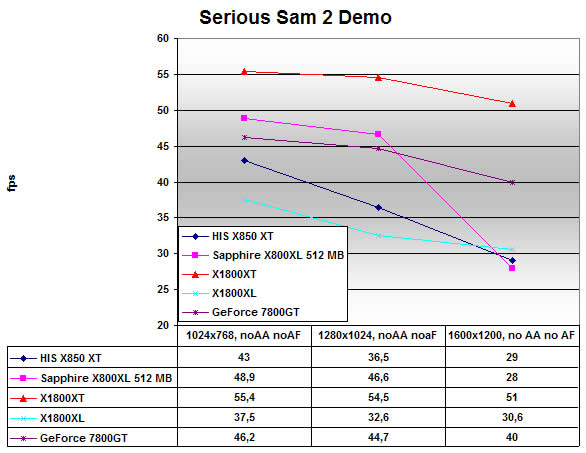

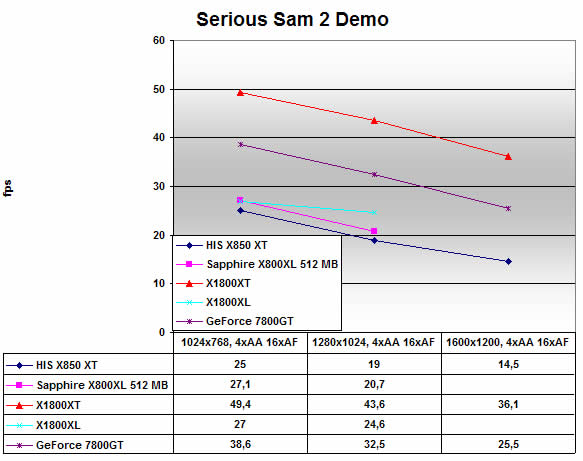

Serious Sam 2 demo

Who couldn’t love the first Serious Sam game? A game that required no real thinking and just was a mindless funny first person shooter. Recently a demo of the sequel was released. It features even more monsters and shiny bright graphics.

How we benched it: We recorded a 155 second long demo where we played the game as usual. This was then replayed and Fraps was used to measure the fps during the playback. As far as we can see the framerate seems to be correct (compared to manual runs). AA and AF were set in the drivers. Everything was set to its highest setting except HDR, which was turned off.

In the regular scores without AA and AF the X1800XT of course dominates. It’s odd to see the 512 MB X800XL scoring so well, but maybe it is the extra memory that helps? In the end it falls away, and the X1800XT, GeForce 7800GT and the X1800XL are the top three performers at 1600×1200.

Turning on AA and AF shows no surprises, except for a problem similar to the one in Call of Duty 2: the X1800XL and the X800XL seem to run with lower or no AA/AF at 1600×1200, since the scores went up dramatically from 1280×960.

Benchmarks – FEAR SP demo

FEAR SP demo

At Ibiza we also had the opportunity to run the FEAR SP demo and bench it. This is yet another promising game that seems to need lots of performance.

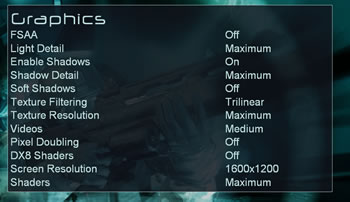

How we benched it: Using Fraps we ran through the game several times at each setting/resolution and recorded the average framerate. We tried to move the same way in every run. The settings were set as the following images show.

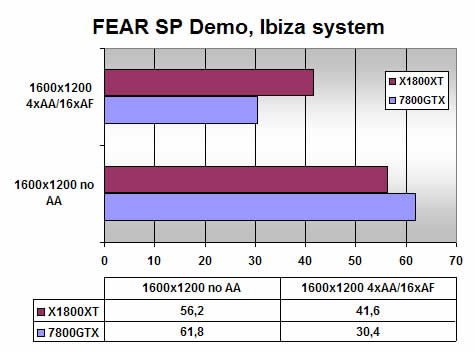

Without any image-enhancing techniques the GeForce 7800GTX beats the X1800XT at 1600×1200, although by a small margin. Turn on AA/AF and suddenly the X1800XT beats the GeForce 7800GTX by a huge 36% margin. Not bad.

Benchmarks – AA and AF performance

How does Anti-aliasing affect the performance?

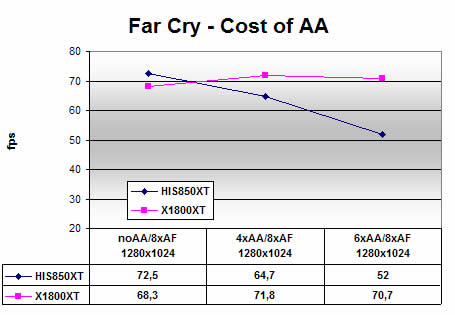

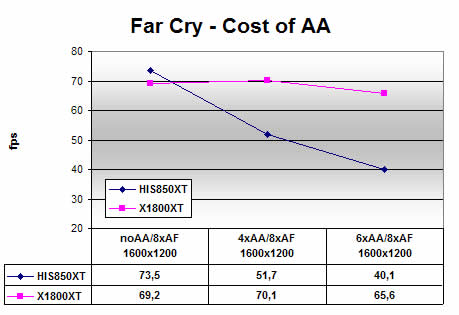

I decided to do a quick test to see how turning on AA affected the overall performance of the X1800XT. I used Doom 3 (demo1) and Far Cry (Pier 1) and ran them with various AA levels.

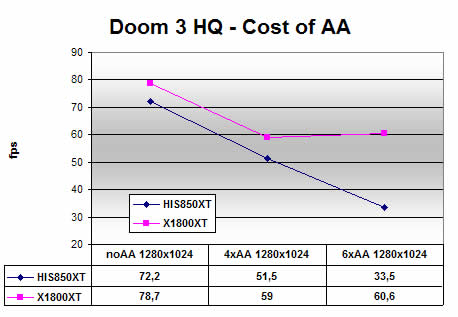

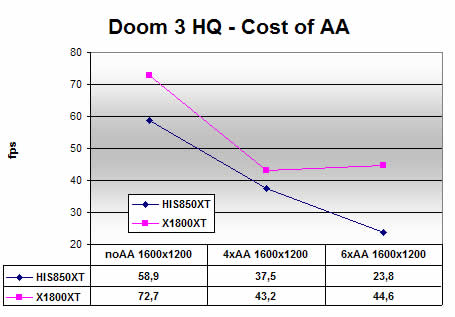

In Doom 3 both the X850XT and the X1800XT lose about 25-40% going from no AA to 4xAA at both 1280 and 1600. However, as you go from 4xAA to 6xAA, the X1800XT simply isn’t affected at all while the X850XT continues to lose performance. Basically it looks like 6xAA is “free”.

In Far Cry the X1800XT doesn’t even lose any performance going from no AA to 4xAA, and it isn’t until you turn on 6xAA at 1600×1200 that you see a minor decrease. The X850XT on the other hand drops 11-30% with each step.

I also turned on the new adaptive AA in Call of Duty 2 at 1600×1200 4xAA/16xAF to see how it worked (very well actually) and to do a quick performance check. While I get 30.1 fps at 1600×1200 4xAA/16xAF, I get 26.4 fps when turning on the adaptive AA. That is a 12% decrease in performance.
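
For anyone who wants to check percentages like this one, the arithmetic looks as follows in Python. The two fps values are the ones quoted above; the helper name is my own.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent (negative = slower)."""
    return (after - before) / before * 100.0


# Call of Duty 2, 1600x1200 4xAA/16xAF: without and with adaptive AA.
change = percent_change(30.1, 26.4)
print(f"{change:.1f}%")
```

The same helper works for the other comparisons in this article, as long as you are careful about which card or setting you treat as the baseline.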

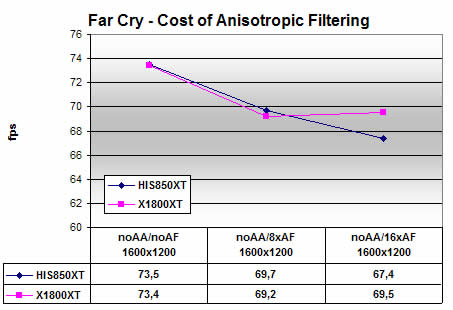

How does Anisotropic Filtering affect the performance?

Unfortunately I chose to do the anisotropic filtering test with Far Cry. It turns out it is a bit too CPU-limited to be a good choice. In the follow-up article to this one I will use some other games to investigate more.

The X850XT loses about 3-5% in each step while the X1800XT loses around 6% in the first step and then nothing more.

Turning on the new high quality anisotropic filtering doesn’t seem to give more than a few % decrease in performance but we will get back to testing this in more detail in the follow-up article.

Overclocking

ATI had brought some overclocking guys, Macci and Sampsa, to see how far they could overclock the X1800XT. To aid them they brought dry ice and about 400 l of liquid nitrogen.

It was quite fun to see them work and cool the poor X1800XT card. In the end they managed to squeeze out 12164 in 3Dmark05. Here’s some info from them:

3DMark05 score = 12164

Driver settings = performance mode in CCC

CPU speed = 3.59GHz (1.74V)

System Memory = 239 MHz 2-2-2-5 (3.42V, Mushkin Redline)

HyperTransport Speed = 239 x 5 -> 1.2GHz

Engine clock = 864 MHz (programmed the core voltage regulator with a value of “a”. I tried increasing the voltage more by going to “9” and the board immediately dies…is it supposed to do that????)

Memory clock = 990 MHz (programmed regulator for MVDDC and MVDDQ to “bf”)
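
To put those numbers in perspective, here is the arithmetic as a short Python sketch. It only uses figures from this article: the 239 x 5 HyperTransport setting, the 864 MHz engine clock, and the 625 MHz stock engine clock of the X1800XT listed earlier. The variable names are my own.

```python
# HyperTransport: reference clock times multiplier, as quoted by the overclockers.
ht_mhz = 239 * 5

# Engine overclock relative to the stock X1800XT engine clock.
stock_engine_mhz = 625
overclocked_engine_mhz = 864
engine_gain_percent = (overclocked_engine_mhz - stock_engine_mhz) / stock_engine_mhz * 100.0

print(ht_mhz, round(engine_gain_percent, 1))
```

So the quoted 1.2 GHz HyperTransport speed is really 1195 MHz, and the engine is running roughly 38% above its stock clock.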

Conclusion

There’s been a lot of speculation on the net that ATI was having issues with the R5xx and that this could become ATI’s “GeForce5”. ATI actually admitted that the reason the cards are late is a bug that, while it was easy to fix, took a long time to find. This is also the reason why the X1600 is coming later than the others. They made a decision to hold up the chip while they were working on finding the bug in the X1800.

From what we have seen so far the X1000 series definitely is a huge step forward for ATI. They finally have not just one card but a whole family of SM3.0 supporting cards. Performance is overall excellent, although I am disappointed with the OpenGL Doom 3 performance. Time will tell if this is due to beta drivers or a bug.

In a few days we will follow this article with more benchmarks, this time from the low-end and midrange cards.

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
