The new XFX GTX-285 XXX, built on the shrunken 55 nm die, is out. We have one of these beasts on the test bench, and we’re going to drive it until we run out of gas.

INTRODUCTION

There has seldom been a more exciting time in GPU history. New, more powerful GPUs are popping up all over the place. Last year we saw the introduction of the 4870, 4870 X2, GTX-260, GTX-260 216, and GTX-280, and this year we’ve already seen the GTX-295 and now the XFX GTX-285 XXX. That’s more excitement than we’ve seen in a long time. Combine that with the Core i7 introduction, toss one of those puppies on a good board with the Intel Core i7 965 Extreme, and you’ve got heart-pumping graphics madness.

It’s hard to get past the excitement of a new GPU hitting the door, especially one that was just released and boasts better performance than its brother, the GTX-280. The GTX-280 found its way into our hearts and in a short time became a legend in its own right. We expect no less from its younger sibling, the XFX GTX-285 XXX.

XFX: The Company

Founded in 1989, PINE Technology designs, develops, manufactures and distributes high-quality digital audio and video devices as well as computer peripherals. PINE also distributes branded computer and communications products. The company’s movement into areas such as global B2B eCommerce and software development has helped keep it ahead of the competition. Today, PINE enjoys strategic alliances with companies such as Dell, NEC, Microsoft, Panasonic, Philips, Ricoh, Samsung, Ingram Micro, Intel, Quantum, Connertech, Pioneer and Fujitsu.

Headquartered in Hong Kong, PINE Technology has more than 1,000 employees worldwide, with 16 offices around the globe, four research and development centers strategically located in the Asia Pacific region, and two factories in China.

Production output at PINE’s factories exceeds an impressive 500,000 units of PC components and I.A. appliances per month. However, our line flexibility enables us to switch lines within a scant four hours to expand capacity to a staggering one million board-level products per month.

XFX, otherwise known as PINE Technology, is a graphics card brand that has been around since 1989 and has since made a name for itself with its Double-Lifetime enthusiast-grade warranty on its NVIDIA graphics adapters and matching excellent end-user support.

XFX dares to go where the competition would like to, but can’t. That’s because, at XFX, we don’t just create great digital video components–we build all-out, mind-blowing, performance crushing, competition-obliterating video cards and motherboards. Oh, and not only are they amazing, you don’t have to live on dry noodles and peanut butter to afford them.

Features & Specifications

| FEATURES |
|---|
| PCI Express 2.0 GPU |
| GPU/Memory Clock at 670/1250 MHz!! |
| HDCP capable |
| 1024 MB 512-bit memory interface for smooth, realistic gaming experiences at Ultra-High Resolutions with AA/AF |
| Support Dual Dual-Link DVI with awe-inspiring 2560×1600 resolution |
| The Ultimate Blu-ray and HD DVD Movie Experience on a Gaming PC |
| Smoothly playback H.264, MPEG-2, VC-1 and WMV video, including WMV HD |
| Industry leading 3-way NVIDIA SLI technology offers amazing performance |
| NVIDIA PhysX™ READY |

| Feature | Description |
|---|---|
| PCI Express 2.0 Support | Designed to run perfectly with the new PCI Express 2.0 bus architecture, offering a future-proofing bridge to tomorrow’s most bandwidth-hungry games and 3D applications by maximizing the 5 GT/s PCI Express 2.0 bandwidth (twice that of first generation PCI Express). PCI Express 2.0 products are fully backwards compatible with existing PCI Express motherboards for the broadest support. |
| 2nd Generation NVIDIA® unified architecture | Second Generation architecture delivers 50% more gaming performance over the first generation through enhanced processing cores. |
| GigaThread™ Technology | Massively multi-threaded architecture supports thousands of independent, simultaneous threads, providing extreme processing efficiency in advanced, next generation shader programs. |
| NVIDIA PhysX™-Ready | Enables a totally new class of physical gaming interaction for a more dynamic and realistic experience with GeForce. |
| High-Speed GDDR3 Memory on Board | Enhanced memory speed and capacity ensure smoother video quality in the latest gaming environments, especially when processing large-scale textures. |
| Dual Dual-Link DVI | Supports hardware with awe-inspiring 2560-by-1600 resolution, such as a 30-inch HD LCD display; driving that massive load of pixels requires a graphics card with dual-link DVI connectivity. |
| Dual 400MHz RAMDACs | Blazing-fast RAMDACs support dual QXGA displays with ultra-high, ergonomic refresh rates up to 2048×1536@85Hz. |
| Triple NVIDIA SLI technology | Offers amazing performance scaling by implementing AFR (Alternate Frame Rendering) under Windows Vista with solid, state-of-the-art drivers. |
| Microsoft® DirectX® 10 Shader Model 4.0 Support | DirectX 10 GPU with full Shader Model 4.0 support delivers unparalleled levels of graphics realism and film-quality effects. |
| OpenGL® 2.1 Optimizations and Support | Full OpenGL support, including OpenGL 2.1. |
| Integrated HDTV encoder | Provides world-class TV-out functionality up to 1080p resolution. |
| NVIDIA® Lumenex™ Engine | Delivers stunning image quality and floating point accuracy at ultra-fast frame rates. |
| Dual-stream Hardware Acceleration | Provides ultra-smooth playback of H.264, VC-1, WMV and MPEG-2 HD and Blu-ray movies. |
| High dynamic-range (HDR) Rendering Support | The ultimate lighting effects bring environments to life. |
| NVIDIA® PureVideo™ HD technology | |
| HybridPower Technology support | Delivers graphics performance when you need it and low-power operation when you don’t. HybridPower technology lets you switch from your graphics card to your motherboard GeForce GPU when running less graphically-intensive applications for a silent, low power PC experience. |
| HDCP Capable | Allows playback of HD DVD, Blu-ray Disc, and other protected content at full HD resolutions with integrated High-bandwidth Digital Content Protection (HDCP) support. (Requires other compatible components that are also HDCP capable.) |

GPU Comparison Table Reference Design

| | Nvidia GeForce GTX 295 | Nvidia GeForce GTX 285 | Nvidia GeForce GTX 280 | Nvidia GeForce GTX 260 Core 216 | ATI Radeon HD4870 X2 | ATI Radeon HD4870 1GB |
|---|---|---|---|---|---|---|
| GPU | 2x GT200b | GT200b | GT200 | GT200/GT200b | R700 XT | R700 |
| Process (nm) | 55 | 55 | 65 | 65/55 | 55 | 55 |
| Core Clock (MHz) | 576 | 648 | 620 | 576 | 750 | 750 |
| Shader Clock (MHz) | 1242 | 1476 | 1296 | 1242 | 750 | 750 |
| Memory Clock (MHz)* | 999 | 1242 | 1107 | 999 | 900 | 900 |
| Memory Size (MB) | 1792 (2x 896) | 1024 | 1024 | 896 | 2048 (2x 1024) | 1024 |
| Memory Type | GDDR3 | GDDR3 | GDDR3 | GDDR3 | GDDR5 | GDDR5 |
| Unified Shaders/Stream Processors | 480 (2x 240) | 240 | 240 | 216 | 800 | 800 |
| Texture Mapping Units | 160 (2x 80) | 80 | 80 | 74 | 80 (2x 40) | 40 |
| Raster Operation Units | 56 (2x 28) | 32 | 32 | 28 | 32 (2x 16) | 32 |
| Memory Interface (bits) | 896 (2x 448) | 512 | 512 | 448 | 512 (2x 256) | 256 |
| Outputs (on reference design) | 2x Dual-Link DVI, 1x HDMI | 2x Dual-Link DVI, S-Video | 2x Dual-Link DVI, S-Video | 2x Dual-Link DVI, S-Video | 2x Dual-Link DVI, S-Video | 2x Dual-Link DVI, S-Video |
| Power Connectors | 1x 6-pin, 1x 8-pin | 2x 6-pin | 1x 6-pin, 1x 8-pin | 2x 6-pin | 1x 6-pin, 1x 8-pin | 2x 6-pin |
| Thermal Design Power (W) | 289 | 183 | 236 | 182 | 286 | 150 |
| Estimated Retail Price (USD) | 499 | 399 | 360 | 289 | 449 | 247 |

XFX GTX-285 XXX Specific Specifications

| Specification | XFX GTX-285 XXX |
|---|---|
| Bus Type | PCI-E 2.0 |
| Performance | XXX |
| GPU Clock (MHz) | 670 |
| Shader Clock (MHz) | 1508 |
| Stream Processors | 240 |
| Texture Fill Rate (billion/sec) | 77.76 – 82.8 |
| Pixels per Clock (peak) | 120 |
| Memory Interface Bus (bit) | 512 |
| Memory Type | GDDR3 |
| Memory Size (MB) | 1024 |
| Memory Clock (MHz) | 2500 (1250 x 2) |
| Output HDCP Capable | 1 |
| Cooling Fansink | √ |
| Max Resolution Analog Horizontal | 2048 |
| Max Resolution Analog Vertical | 1536 |
| Max Resolution Digital Horizontal | 2460 – 2560 |
| Max Resolution Digital Vertical | 1600 |
| RoHS | √ |
| Dimensions L (Inches) | 10.5 |
| Dimensions W (Inches) | 4.376 |
| Dimensions H (Inches) | 1.5 |
| Dimensions L (Metric) | 26.7 cm |
| Dimensions H (Metric) | 3.8 cm |
| Profile | Double, Standard |
| PhysX™ technology | √ |
| CUDA™ technology | √ |
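For readers who like to sanity-check spec sheets, here is a minimal Python sketch of where a couple of these numbers plausibly come from. It assumes the usual back-of-the-envelope formulas (bus width times effective memory clock for bandwidth, core clock times per-clock throughput for fill rate). The function names are ours, the bandwidth figure is derived rather than quoted by XFX, and reading the quoted fill-rate range as 648 to 690 MHz times 120 per clock is our assumption, not something XFX states.

```python
# Figures taken from the XFX GTX-285 XXX spec table; the formulas are the
# usual rule-of-thumb ones, not an official XFX or NVIDIA calculation.
BUS_WIDTH_BITS = 512        # memory interface width
EFFECTIVE_MEM_MHZ = 2500    # 1250 MHz x 2 (double data rate)
PER_CLOCK_PEAK = 120        # "Pixels per clock (peak)" row


def memory_bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Peak memory bandwidth in GB/s, with 1 GB taken as 10**9 bytes."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_mhz * 1e6 / 1e9


def fill_rate_billion_per_s(core_mhz: float, per_clock: int) -> float:
    """Peak fill rate in billions per second at a given core clock."""
    return core_mhz * 1e6 * per_clock / 1e9


print(memory_bandwidth_gb_s(BUS_WIDTH_BITS, EFFECTIVE_MEM_MHZ))

# 648 MHz is the reference core clock, 670 MHz is the XXX clock, and
# 690 MHz is an assumed upper bound that reproduces the quoted 82.8 figure.
for core_mhz in (648, 670, 690):
    print(core_mhz, round(fill_rate_billion_per_s(core_mhz, PER_CLOCK_PEAK), 2))
```

Under these assumptions the memory bandwidth works out to 160 GB/s, and the two ends of the quoted fill-rate range line up with 648 MHz and 690 MHz, with the 670 MHz XXX clock landing in between.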

PICTURES, IMPRESSIONS & BUNDLE

The XFX GTX-285 comes in an attractive box that you could identify as an XFX product even without the brand name on it. Their packaging is rather distinctive.

The XFX GTX-285 is well protected inside the outer box by another box, and when you flip the lid you get a peek at the contents, which are still layered in protection.

Inside the box you’ll find your prize, the XFX GTX-285 XXX, and its bundle: a Do Not Disturb sign, an install guide, a quick setup guide, two Molex to 6-pin PCI-E adapters, one DVI to VGA adapter, one DVI to HDMI adapter, a sound cable for use if you want to use HDMI, and an S-Video adapter. Last but not least (and thank you XFX for including a full game title), FarCry 2. Lately we’ve noticed full game titles going missing from GPU bundles. The inclusion of FarCry 2 just makes the bundle sweeter and goes to show that, on top of their double lifetime, modder and overclocker friendly warranty, they care about the end user. Breaking in a new GPU on a new game is one of the true joys in life. We’ve been bugging Congress about making game titles mandatory to bundle with GPUs, but we heard grumblings of PacMan, Pong, and Qbert from them, so we figured they were a bit out of touch and we’ll have to resort to choosing vendors like XFX that still care enough to toss a major full game title in the box. FarCry 2 has been out for a while, but who would complain about seeing you come through the door with a killer game title like FarCry 2 in hand?

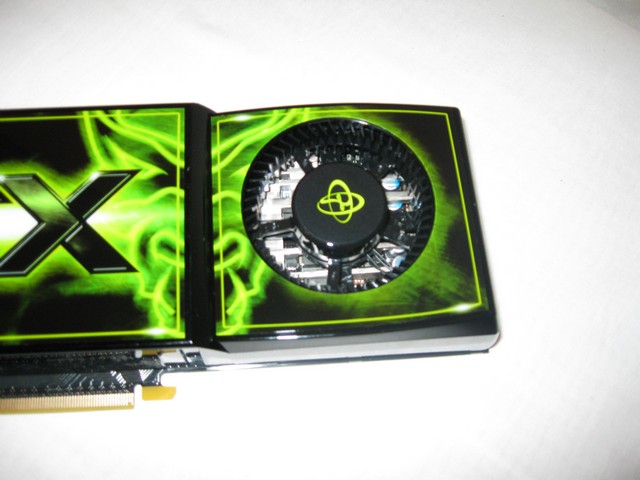

That brings us to the graphics powerhouse itself. There’s nothing like the smell of a new GPU in the morning. It just screams Christmas, Birthday, and losing your Vir******, err Easter, yeah that’s it, Easter all in one. Without a doubt, XFX produces one of the best looking GPUs around. No need for some half-dressed game character, knight in glowing armor, or other gimmick on this label. The sharp looking GTX logo with the XFX brand name above it is enough to make the heart go pitter-patter just looking at it. We do like the stylized green creature between the logo and fan, but if it had nothing more than the GTX logo with the XFX name above it, we’d still know this is one serious graphics weapon.

The XFX GTX-285 XXX has the standard squirrel cage fan we’ve become accustomed to seeing since the introduction of the 8800GTX a couple of GPU generations back. With the new die shrink from 65nm to 55nm and the reduced power requirements of the 55nm process we wonder if it’s going to be enough to offset the factory overclocked nature of this beast.

We’ve already seen the card from the top, but it looks so nice standing there that we thought we’d throw this one in for the drool factor. You might want to check your case for room, though: these are full-sized cards running 10.5 inches in length. Smaller chassis need not apply.

There’s the top-down shot, where you can get a peek at the two 6-pin PCI-E power connectors and the Triple SLI ready connectors.

The back of the GPU is fairly unremarkable and has a bevy of small stickers on it. Back plates might give a card a little more polished look but retain heat so we’re good with a bare back card.

The card uses the standard dual slot cooler design, with two DVI-D outputs and one S-Video output. HDMI is by adapter, and though it might be kinda nice to have a built-in HDMI port, having one of these beauties connected to a TV just doesn’t seem right. It should be in a gaming shrine and worshiped daily. We tested the HDMI adapter on an LCD monitor we’ve got and everything was kosher there.

There’s a peek at the butt, err rear of the card and you can get a peek at the ventilation at the rear of the card where the squirrel cage fan draws air over the end of the board to keep things nice and cool.

We’ll leave the pictures and impressions page with another drool shot. No, scratching through your screen won’t nab you one of these critters, but your local e-tailer can help you out.

TESTING & METHODOLOGY

To test the XFX GTX-285 XXX we did a fresh load of Vista 64. We loaded all the latest drivers for the Asus P6T Deluxe motherboard, downloaded the latest drivers for the GPU and applied all patches and updates to the OS. Once we had everything updated and the latest drivers installed we got down to installing our test suite.

Once we had everything up to snuff, we loaded our testing suite, applied all the patches to the games, and checked for updates on 3DMark06 because it was crashing on load. We found that some update we’d done had knocked out the OpenAL portion of 3DMark06’s install. We downloaded the OpenAL standalone installer (Thanks Dragon) and ran it, which fixed our crash in Vista 64. Then we installed 3DMark Vantage, stoked up a pot of coffee, and we were ready to run. We ran each test at least three times and report the average of the three test runs here. Some runs were made more than three times (the coffee didn’t get done quickly enough). We also cloned the drive using Acronis to protect ourselves from any little overclocking accidents that might happen. Testing was done at the default clock speed of the GPUs tested unless otherwise noted.
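The averaging step is simple enough to sketch in a few lines of Python. This is only an illustration: the function name, the one-decimal rounding, the choice to average every run supplied, and the sample frame rates are all made up for the example and are not our actual tooling or results.

```python
from statistics import mean
from typing import Sequence


def average_fps(runs: Sequence[float], min_runs: int = 3) -> float:
    """Average the frame rates from repeated runs of a single test.

    Refuses to report a score from fewer than `min_runs` runs, mirroring
    the "at least three runs per test" rule described in the text.
    """
    if len(runs) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(runs)}")
    return round(mean(runs), 1)


# Hypothetical frame rates from three runs of one test (not real data).
sample_runs = [60.0, 62.0, 64.0]
print(average_fps(sample_runs))
```

Averaging several runs smooths out run-to-run noise, and the minimum-run check keeps a single lucky (or unlucky) pass from ending up in a chart.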

Test Rig

| Test Rig “Quadzilla” | |
|---|---|
| Case Type | Top Deck Testing Station |
| CPU | Intel Core i7 965 Extreme (3.74 GHz, 1.2975 Vcore) |
| Motherboard | Asus P6T Deluxe (SLI and CrossFire on Demand) |
| RAM | Corsair Dominator DDR3 1866 (9-9-9-24 1.65v) 6 GB Kit |
| CPU Cooler | Thermalright Ultra 120 RT (Dual 120mm Fans) |
| Hard Drives | Patriot 128 GB SSD |
| Optical | Sony DVD R/W |
| GPUs Tested | Nvidia (drivers 181.20): XFX GTX-285 XXX, BFG GTX-295, Asus GTX-295, EVGA GTX-280 (2), XFX 9800 GTX+ Black Edition, BFG GTX-260 MaxCore, Leadtek GTX-260. ATI (drivers 8.12): Palit HD Radeon 4870X2, Sapphire HD Radeon 4870, Sapphire HD Radeon 4850 Toxic |
| Case Fans | 120mm fan cooling the MOSFET/CPU area |
| Docking Stations | Thermaltake VION |
| Testing PSU | Thermaltake Toughpower 1200 Watt |
| Legacy | Floppy |
| Mouse | Razer Lachesis |
| Keyboard | Razer Lycosa |
| Gaming Ear Buds | Razer Moray |
| Speakers | Logitech Dolby 5.1 |
| Any Attempt to Copy This System Configuration May Lead to Bankruptcy | |

Synthetic Benchmarks & Games

| Synthetic Benchmarks & Games |
|---|
| 3DMark06 v. 1.1.0 |
| 3DMark Vantage |
| Company of Heroes v. 1.71 |
| Crysis v. 1.2 |
| World in Conflict Demo |
| FarCry 2 |
| Crysis Warhead |
| Mirror’s Edge (Day One Patch) |

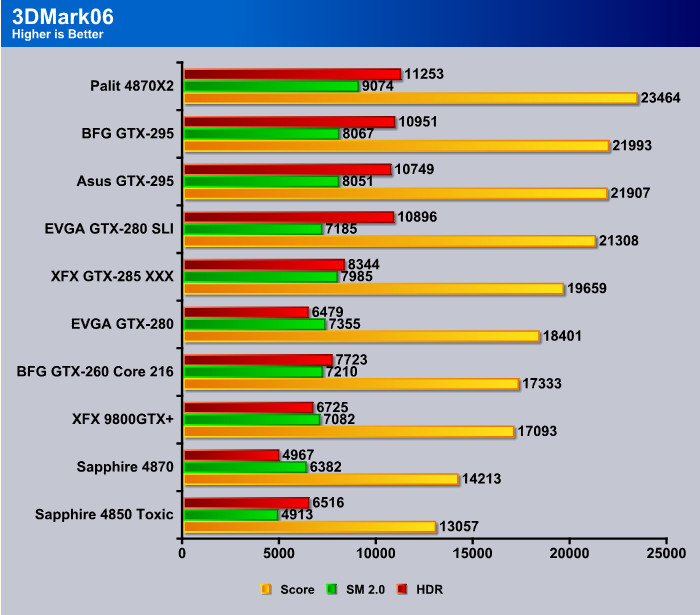

3DMARK06 V. 1.1.0

The XFX GTX-285 did really well, coming in at the top of the stack of single GPU cards. Multiple card configurations and dual GPU cards did better, but those are different classes of setup. You can compare the two, but for scoring purposes we need to remain in the GPU’s class. The XFX GTX-285 is in the single GPU on a single PCB class and is the fastest single card that we have tested to date.

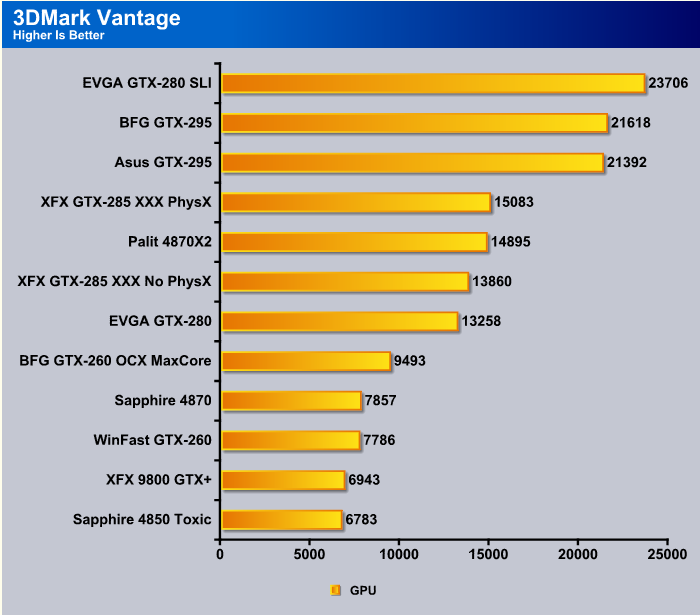

3DMark Vantage

The newest video benchmark from the gang at Futuremark. This utility is still a synthetic benchmark, but one that more closely reflects real world gaming performance. While it is not a perfect replacement for actual game benchmarks, it has its uses. We tested our cards at the ‘Performance’ setting.

Currently, there is a lot of controversy surrounding NVIDIA’s use of a PhysX driver for its 9800 GTX and GTX 200 series cards, which puts the ATI brand at a disadvantage. With the PhysX driver installed, 3DMark Vantage uses the GPU to perform PhysX calculations during a CPU test, and this is where things get a bit gray. The Driver Approval Policy for 3DMark Vantage states: “Based on the specification and design of the CPU tests, GPU make, type or driver version may not have a significant effect on the results of either of the CPU tests as indicated in Section 7.3 of the 3DMark Vantage specification and white paper.” Did NVIDIA cheat by having the GPU handle the PhysX calculations, or are they perfectly within their rights since they own Ageia and all its IP? I think this point will quickly become moot once Futuremark releases an update to the test.

Once again the PhysX specter rears its head, so in this case we’ve chosen to test with and without PhysX. We report the score of the currently tested GPU both ways. Either way you look at it, the XFX GTX-285 remains the fastest single core GPU on the stack. Well, it’s not on the stack, it’s in Quadzilla, but you know what we mean.

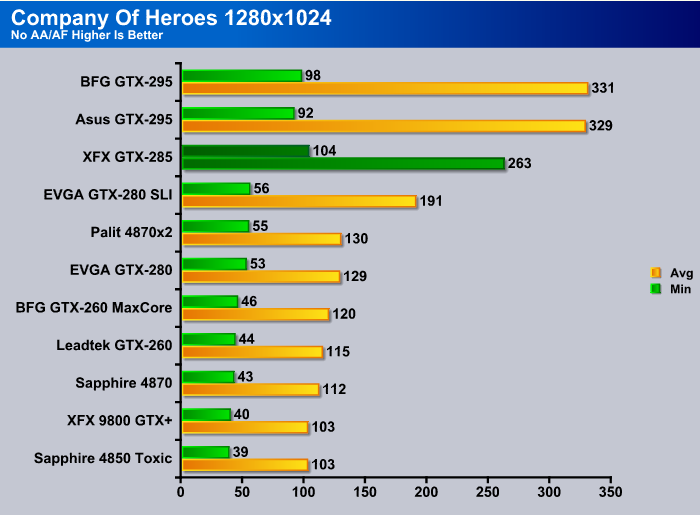

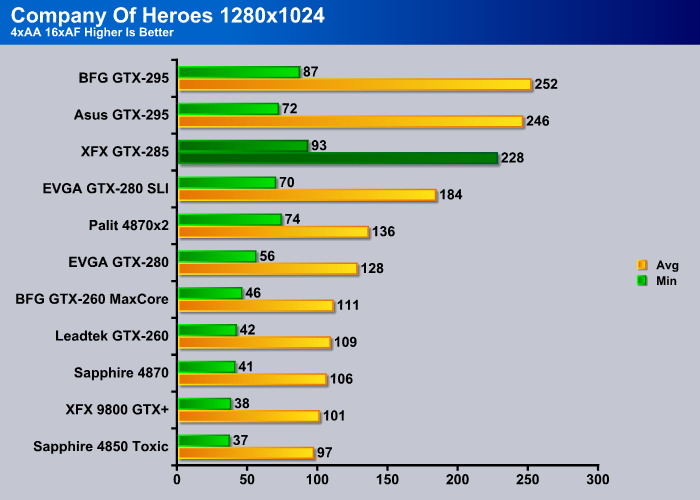

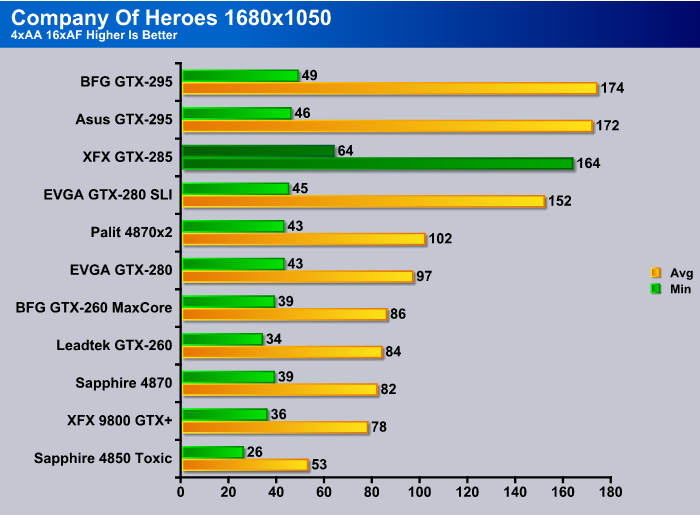

Company Of Heroes v. 1.71

Company of Heroes isn’t known to scale well with SLI or Crossfire. The XFX GTX-285 XXX came in third place overall but on top of the stack of single core GPUs, and it even outran the GTX-280 SLI and Palit 4870 X2.

Kicking the resolution up one notch, the XFX GTX-285 XXX remains at the top of the single GPU stack, and its factory overclocked nature puts it ahead of the EVGA GTX-280 by a pretty decent margin.

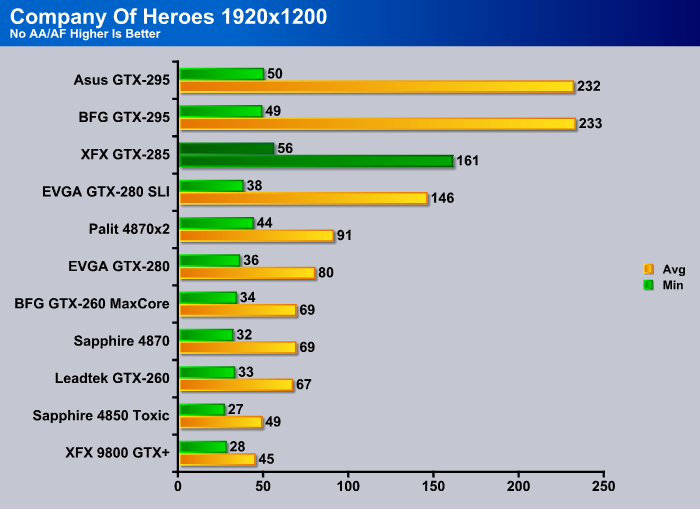

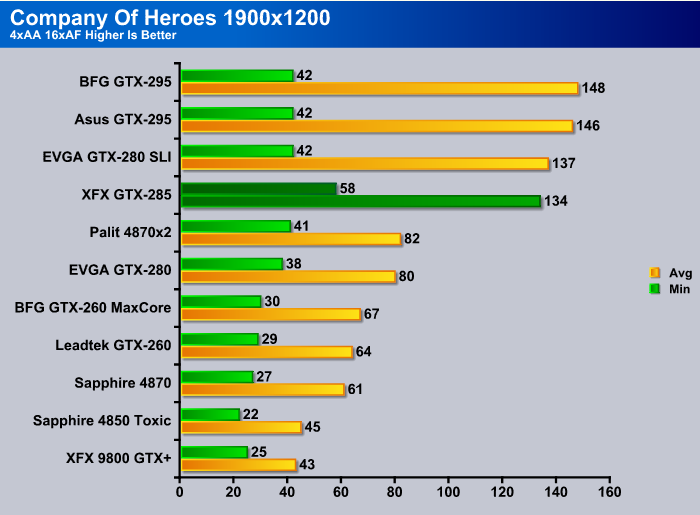

In DirectX 10 at higher resolutions, CoH stresses GPUs pretty well. Even without AA/AF turned on, some of the cards fall below the 30 FPS mark where a game’s playability is affected. The XFX GTX-285 XXX remains at the top of the stack of single core GPUs, and once again CoH prefers the faster single card over SLI.

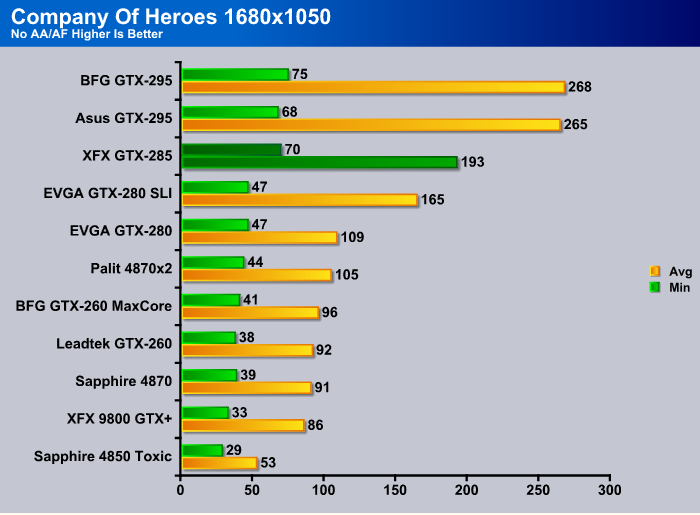

Kicking AA/AF on but backing down to the lowest resolution tested, the XFX GTX-285 XXX stays in the lead of the single core GPUs. We could mention that not only is it on top of the other GPUs, it also looks really good sitting on Quadzilla, and we’re pretty sure some of the other GPUs we tested are puffing up their chests, taking offense at its sharp good looks.

Moving to the medium resolution tested, where graphics are more GPU intensive than CPU intensive, the XFX GTX-285 lands right there on the top of the stack (of single core GPUs, if you haven’t guessed that inference yet) and shows no indication that it is willing to let go of its position without a fight.

Kicked up as high as we tested with the eye candy turned on, the XFX GTX-285 beat the GTX-280 SLI setup in minimum FPS but gave up a few FPS in the average FPS test. We think that its older brothers, the GTX-280s, threatened to give it a noogie if it didn’t let them shine just a little. For those of you who aren’t familiar with noogies, apparently you don’t have an older brother. That’s where your older brother sneaks up, throws you in a headlock, and rubs his knuckles briskly, with some pressure, across your head. Yeah, you don’t want a noogie, so we don’t blame the XFX GTX-285 XXX for giving up a frame or two to a dual GPU setup.

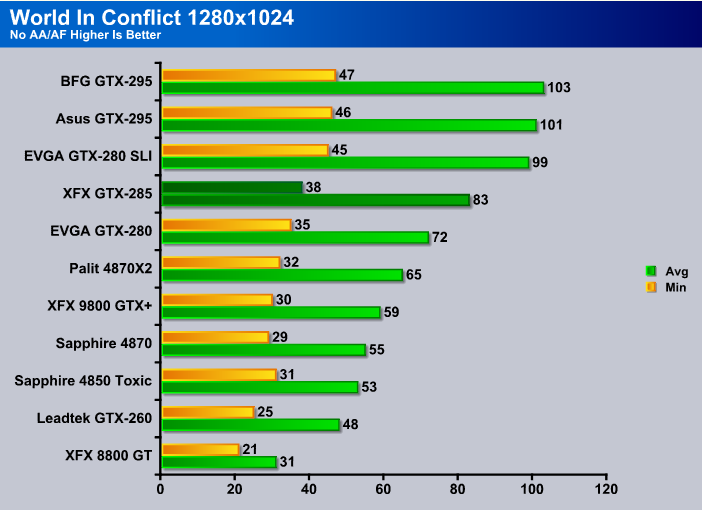

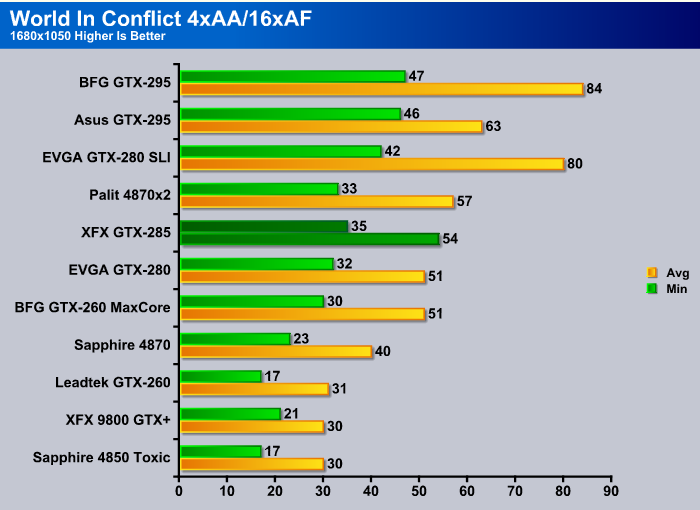

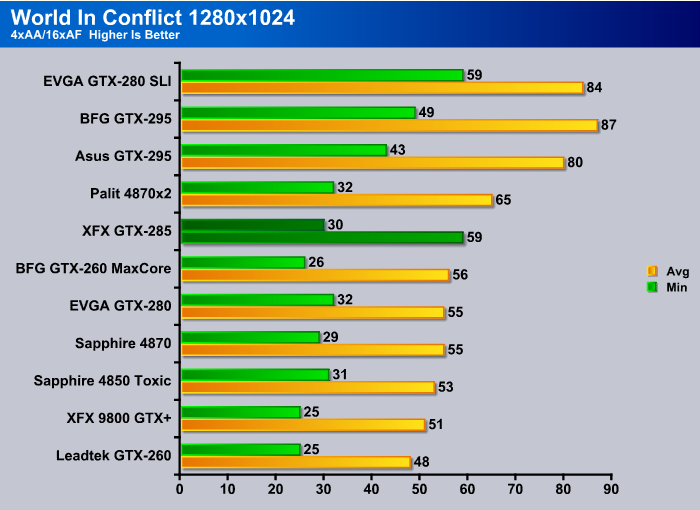

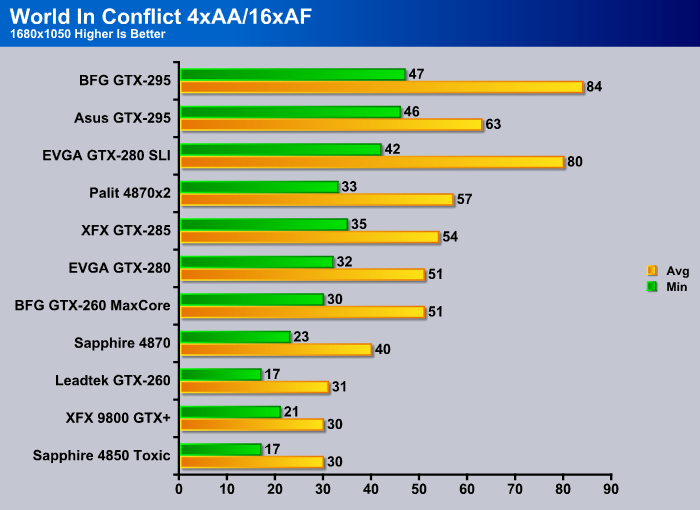

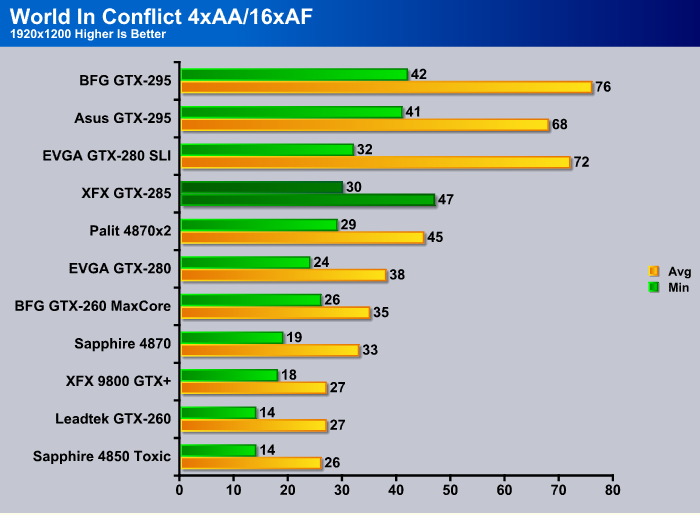

World in Conflict Demo

The XFX GTX-285 XXX stays in the lead of the single core GPUs. All the new GPU designs and the jargon associated with them have us thinking that maybe we need to come up with some new words to describe them. Single Core GPU, Dual Core GPU, Dual Core Dual PCB, and Dual Core Single PCB get a little long and cumbersome to type out all the time. If you have any ideas for cool synonyms, head down to the discussion thread and drop them there. They might get used in future reviews. That, or we’ll get to make fun of each other, which is always fun.

The XFX GTX-285 XXX seems bound and determined to stay at the front of the single core GPU line. Even at 1280×1024, where WiC is a little more CPU bound than GPU bound, it does pretty well.

Again sitting about 10% faster than its older brother the GTX-280, the XFX GTX-285 XXX is on top of the pile of single core GPUs. We like the consistency the XFX is showing.

Well, the XFX GTX-285 XXX is still on top of the pile of single core GPUs, but we hit 30 FPS, the border of playability, where frame rates still look rock solid to the human eye. Are we going to see it drop below 30 FPS in this GPU crushing game?

We were just checking your short term memory. Remember that at 1280×1024 WiC is more CPU bound than GPU bound, and the XFX GTX-285 XXX picks up a few FPS as the CPU constriction lets up at 1680×1050.

You can see that at 1920×1200 a lot of the tested GPUs drop below 30 FPS with the eye candy turned on. The XFX GTX-285 XXX remains at 30 FPS and on top of the single core GPU pack. Any time a GPU does that in this GPU crushing game, we’re suitably impressed.

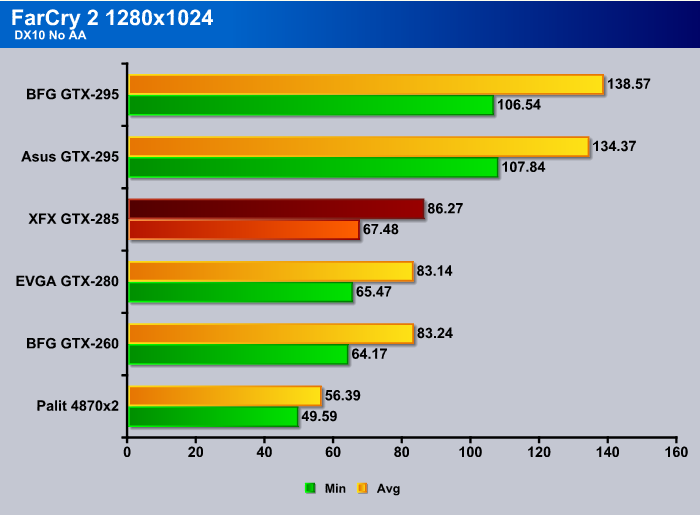

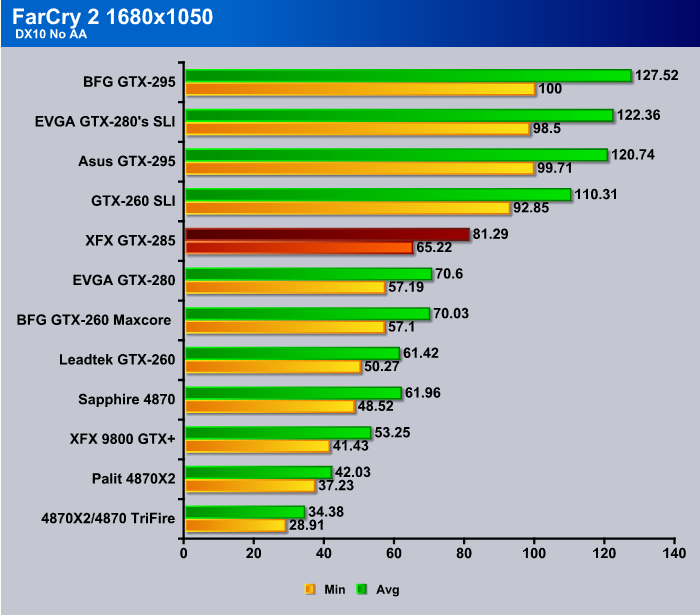

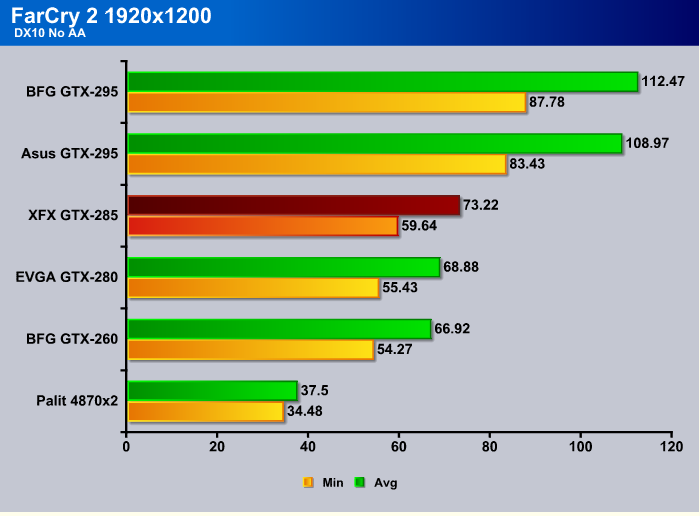

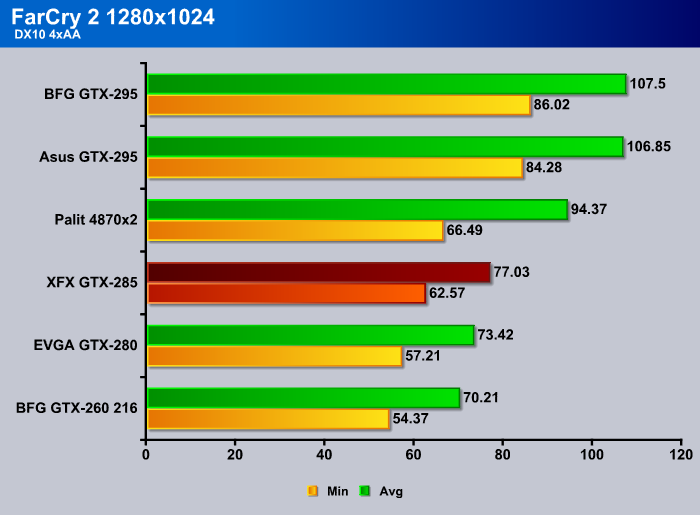

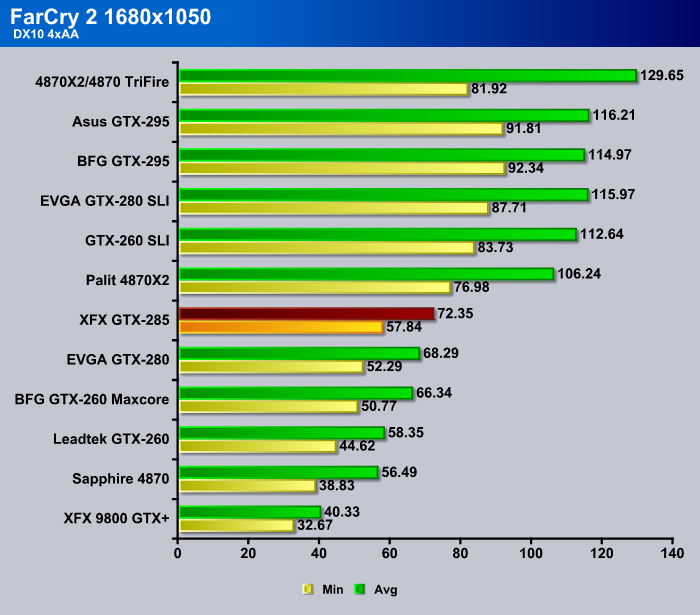

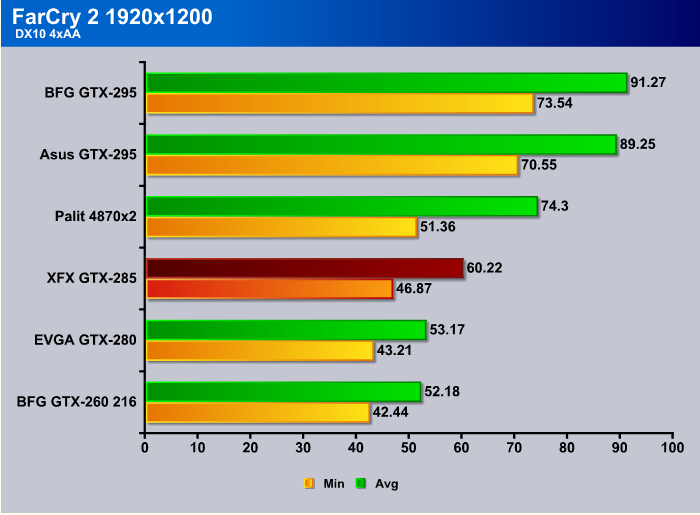

Far Cry 2

Coming into FarCry 2 we’ve expanded the chart from the last GPU review to include some more GPUs. Rest assured, as time allows, we’ll cover the whole stack. We had Nvidia drivers installed this go-around, so we tested a couple more Nvidia GPUs. Next time around we’ll expand the ATI lineup in this epic title and complete our FarCry 2 charting with the whole stack.

The XFX GTX-285 XXX comes in on the top of the single core GPU category. Just to give you an idea of testing a little deeper than the surface explanation we usually post: here we have six GPUs in one game, at three resolutions and two quality settings, for a total of six combined resolution/quality tests. Six tests on six GPUs makes 36 tests. Each test was run a minimum of three times, for a total of 108 bench runs that take about 3 minutes apiece. That’s 324 minutes, or 5 hours 24 minutes, just benching FarCry 2. Then we have two ORB (Futuremark) products at another hour twenty per GPU, so another 8 hours, or 13 hours 24 minutes to bench six GPUs. After that comes adding and averaging the scores, checking that by rerunning the numbers, chart building, chart correcting, then coding and proofing the review. By the end of the process you have about 40-60 hours in a review across a minimum of 5-7 days, usually longer.

So you’re thinking that’s slow? Use those same numbers to extrapolate across 4-6 games, going with the best case of four games. Four games at roughly 5 hours 20 minutes each is 21 hours 20 minutes benching games; add the Futuremark time and you get 29 hours 20 minutes total bench time. Throw in the whole GPU stack at once and you’re a brain-dead, Red Bull chugging zombie. That’s why we are incrementally including GPUs and changing the game lineup. To redo the whole stack with a fresh rack of games might take as long as a month. So, the next time you think it must be nice to be a reviewer, keep this polite little blurb in mind. Then keep in mind that driver updates require more benching to be done, and you’ll quickly see there’s no free lunch.
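If you want to check that arithmetic yourself, here is a small Python sketch of the bench-time math. The constants come straight from the paragraph above; the function names are ours, and the assumption that every game takes the same time as FarCry 2 is the same simplification the text makes.

```python
GPUS = 6                          # cards on the chart
RESOLUTIONS = 3                   # resolutions tested per game
QUALITY_SETTINGS = 2              # with and without AA/AF
RUNS_PER_TEST = 3                 # minimum runs per test
MINUTES_PER_RUN = 3               # approximate length of one bench run
FUTUREMARK_MINUTES_PER_GPU = 80   # "another hour twenty" per GPU


def as_hours_minutes(minutes: int) -> str:
    """Format a minute count as 'H h MM min'."""
    hours, mins = divmod(minutes, 60)
    return f"{hours} h {mins:02d} min"


def game_minutes(gpus: int = GPUS) -> int:
    """Bench time for one game across all GPUs, in minutes."""
    tests_per_gpu = RESOLUTIONS * QUALITY_SETTINGS
    return gpus * tests_per_gpu * RUNS_PER_TEST * MINUTES_PER_RUN


def total_minutes(games: int, gpus: int = GPUS) -> int:
    """Bench time for `games` games plus the Futuremark runs, in minutes."""
    return games * game_minutes(gpus) + gpus * FUTUREMARK_MINUTES_PER_GPU


print(as_hours_minutes(game_minutes()))     # 5 h 24 min
print(as_hours_minutes(total_minutes(1)))   # 13 h 24 min
print(as_hours_minutes(total_minutes(4)))   # 29 h 36 min
```

The four-game estimate in the text rounds each game down to 5 hours 20 minutes, which is why it lands at 29 hours 20 minutes; using the unrounded 324 minutes per game gives 29 hours 36 minutes. Either way, it’s a lot of coffee.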

We’re getting used to seeing the XFX GTX-285 XXX on top of the stack of single core GPUs. On average we’d have to say it’s about 10-15% faster than a single GTX-280. Take into consideration the power savings from the shrunken 55nm die and we’re beginning to think we’re looking at a winner here.

Coming to the end of the FarCry 2 testing without AA/AF, the XFX GTX-285 XXX is still on the throne of the single core class GPUs. You have to like a GPU that performs this well and at the advertised percentages above previous generations.

The XFX GTX-285 XXX once again comes in on top of the single core GPU stack at 1280×1024 with AA/AF turned on. The dual GPU cards come in ahead of it as expected, but comparing dual core GPU cards to single core GPU cards is apples and oranges. The XFX GTX-285 XXX comes in at the top of its class.

With the expanded data we have on FarCry 2, at 1680×1050 with AA/AF turned on, at first glance you might think that the XFX GTX-285 XXX is middle of the road. In reality, despite its chart placement, it’s still the fastest single card on the chart. Everything above it is a multiple GPU setup or a dual core GPU card.

At the highest resolution tested, the GTX-285 XXX is still on top of the other single core GPUs, and while that might change in a test or two later on, its consistent placement in the first three games we’ve looked at and good scores in 3DMark06 and Vantage are quickly earning it the crown of King of the single core GPU class.

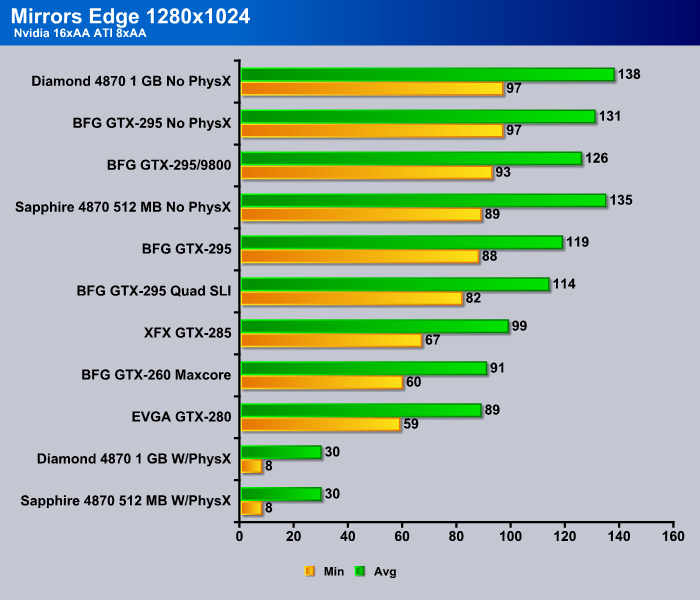

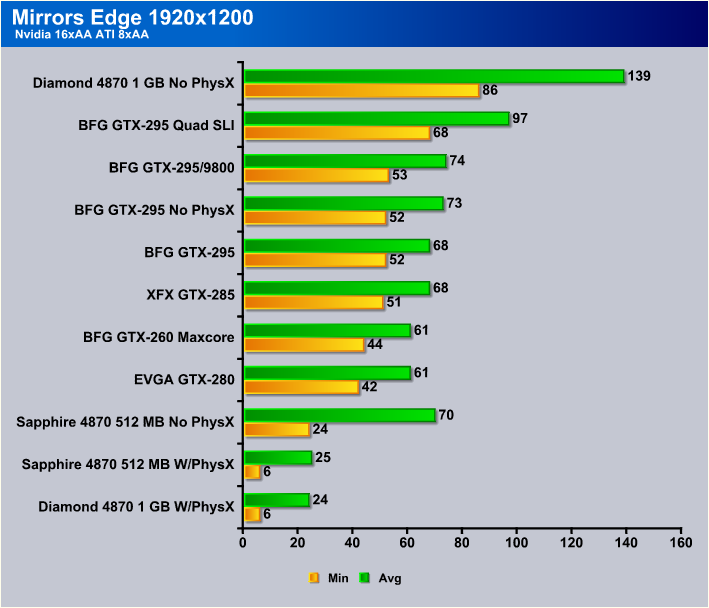

Mirror’s Edge

Mirror’s Edge is a first person action-adventure video game developed by EA Digital Illusions CE (DICE). The game was released on PlayStation 3 and Xbox 360 in November 2008. A Windows version was also released on January 13, 2009. The game was announced on July 10, 2007, and is powered by the Unreal Engine 3 with the addition of a new lighting solution, developed by Illuminate Labs in association with DICE. The game has a realistic, brightly-colored style and differs from most other first-person perspective video games in allowing for a wider range of actions—such as sliding under barriers, tumbling, wall-running, and shimmying across ledges—and greater freedom of movement, in having no HUD, and in allowing the legs, arms, and torso of the character to be visible on-screen. The game is set in a society where communication is heavily monitored by a totalitarian regime, and so a network of runners, including the main character, Faith, are used to transmit messages while evading government surveillance.

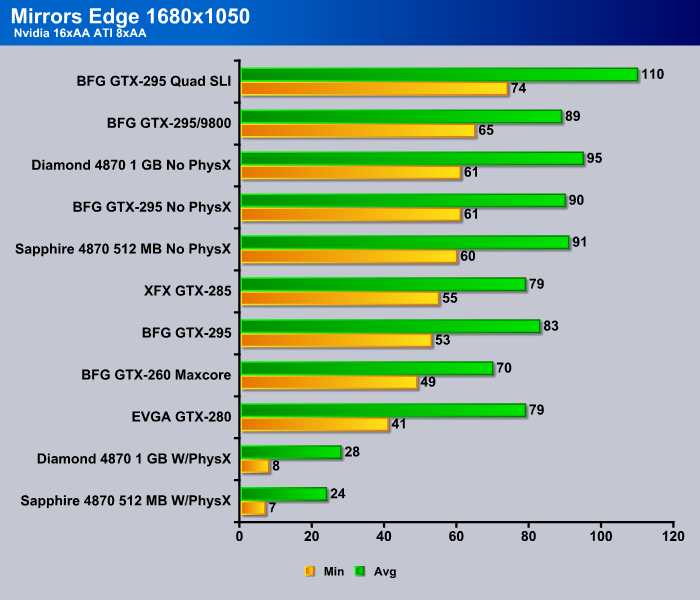

Mirror’s Edge uses PhysX. We are fully aware of every implication of PhysX in benchmarking. PhysX at this time is Nvidia specific. Mirror’s Edge, however, also allows for PhysX to be run on the CPU, and allows for PhysX to be turned off. We’re not sure if Mirror’s Edge is going to be used in our benchmarking suite. If it is used as part of our benchmarking suite, it will be with PhysX considered as an additional quality setting, and the lack of PhysX on ATI cards will not be held against ATI. We deliberately used different AA/AF settings in this rack of benches. We used 8xAA for ATI cards and 16xAA for Nvidia cards. Both are the highest possible settings for each brand and representative of the highest quality of eye candy you can get using each brand. At this time we are not using Mirror’s Edge in consideration of any scoring of any GPU, but merely as information that needs to be put forth. We are not reporting benches without AA/AF because, frankly, the engine is optimized well enough that we don’t feel the need to compromise and lower quality settings, which in some cases would skyrocket the FPS. We want to see eye candy results. We reserve the right to change our stance on Mirror’s Edge at any point, and if any changes are made we will notify you of the changes at the time they are made. Let the complaining commence.

Keep in mind that these results are on an Uber High End System: an Intel 965 Extreme on an Asus P6T Deluxe running 1866 Dominator and a 128 GB Patriot SSD. Your results might vary, but this is what Quadzilla churned out. With PhysX enabled, frame rates on the Diamond and Sapphire cards dropped as low as 8 FPS and averaged 30 FPS. The bench was choppy, so we’d say try a run with PhysX enabled to see what it looks like in game, but then turn PhysX off and play the game. Once PhysX is disabled, ATI cards do pretty well. We ran the Nvidia cards with PhysX and at a higher AA/AF setting, 8x for ATI and 16x for Nvidia. When we tested without PhysX on Nvidia cards we made note of it in the chart. The ATI 4870 512 did well at this resolution, but with 512 MB of memory we’re pretty sure it will bog down some a little later. Mirror’s Edge liked the ATI 4870 1 GB card, and it turned in the highest frame rates with PhysX turned off. That was at the lower AA/AF setting, so if you want to run both ATI and Nvidia at 8x to see the differences you can, but we’re not going there ourselves at this time.

For fun we ran a bunch of Nvidia cards, and ran the GTX-295/9800GTX+ as a GPU and dedicated PhysX card. The combination picked up a few frames but isn’t the optimal combination of cards. A lesser but capable card, the BFG GTX-260 216, paired with the 9800GTX+ would be optimal. All the Nvidia cards did really well with and without PhysX enabled.

Moving up the resolution isn’t going to relieve the bottleneck of trying to run PhysX on ATI cards, so if you want to run PhysX, we’d recommend an add-on Ageia PhysX card, if you can find one, or an Nvidia GPU. That’s dependent on whether you want the additional eye candy. At 1680×1050 with PhysX disabled you shouldn’t have any problem with playability.

Once again, none of the Nvidia GPUs had a problem with or without PhysX. If you notice, though, the GTX-260 216 came out a little better than the GTX-280 on minimum FPS, but the GTX-280 did better on average FPS. That may be an indication that Mirror’s Edge favors GPUs with larger amounts of memory.

When we went to the maximum resolution tested, the Sapphire 4870 512 dropped in frame rates drastically, confirming that Mirror’s Edge likes a lot of video memory. The Diamond 4870 1 GB broke out and churned frames like there was no tomorrow. All of the Nvidia cards did pretty well.

We refrained from using the 4870 X2 in this rack. The only way to enable both cores on the card at this time is to rename the Mirror’s Edge EXE file to the name of a game that has an existing Crossfire profile. We’re not going to report data on the 4870 X2 using another game’s Crossfire profile. We are told and have read that it works, but if it gives you a problem on the 4870 X2, then you can disable AI in CCC, which will disable the second core and let the 4870 X2 run as a single core 4870 1GB if you like. Otherwise, you can rename the EXE file to FarCry2 or MassEffect and it should run both cores. It did for us, with varying degrees of success.

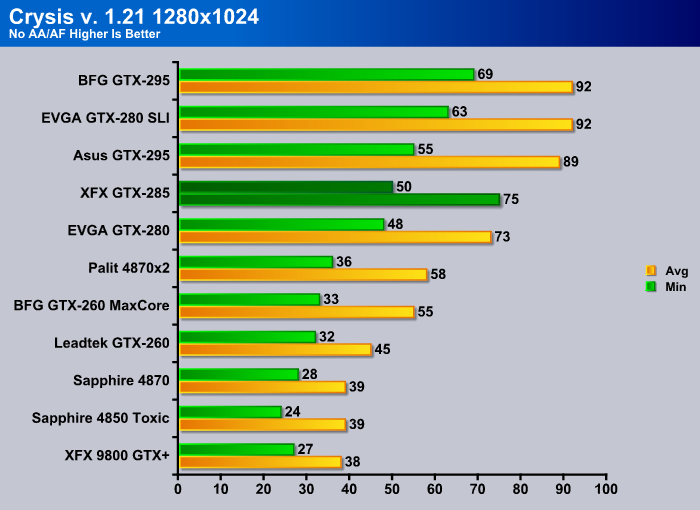

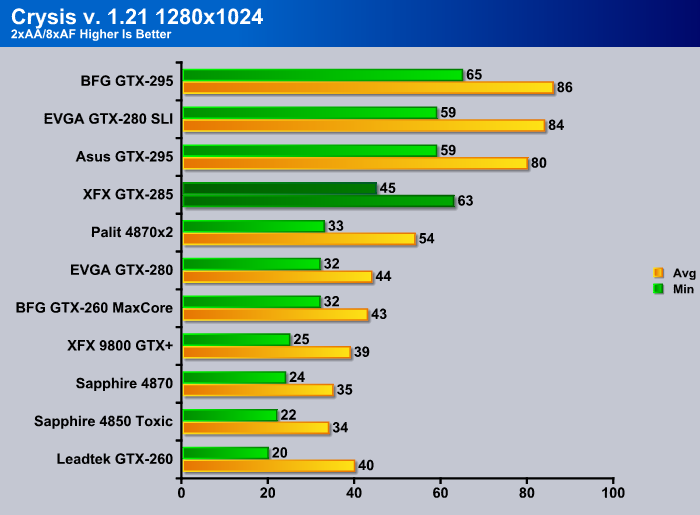

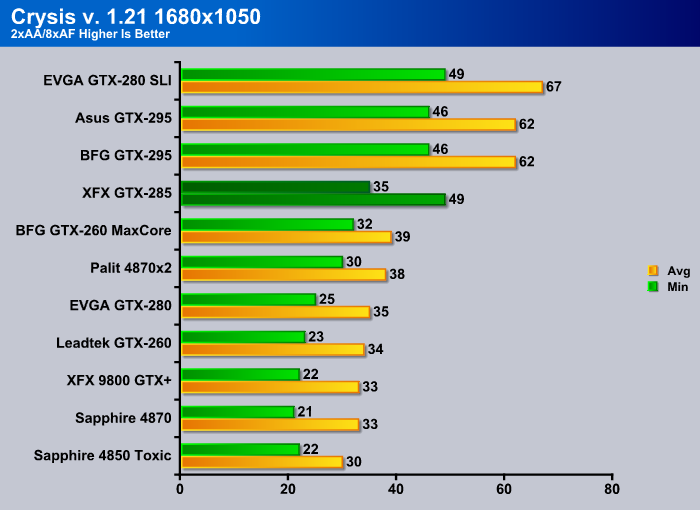

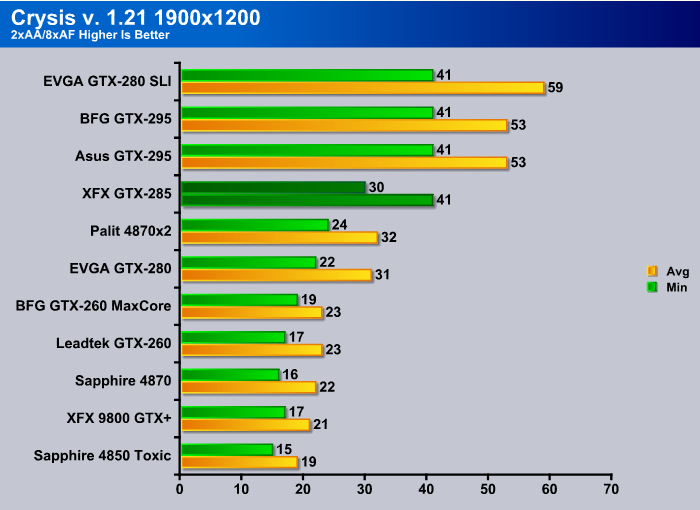

Crysis v. 1.2

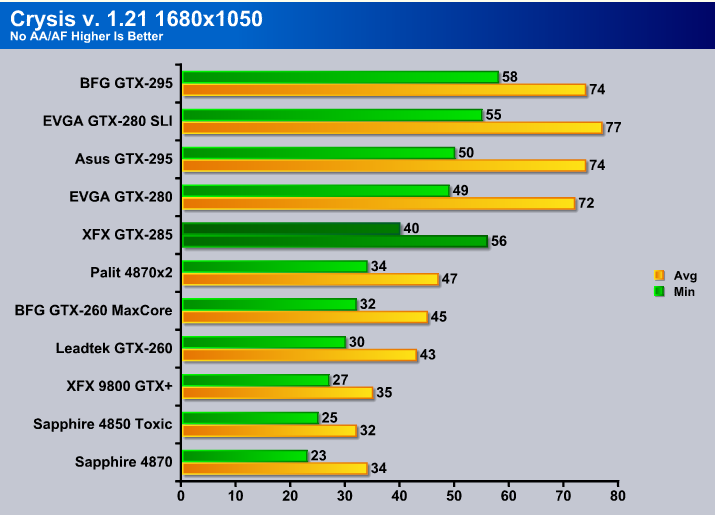

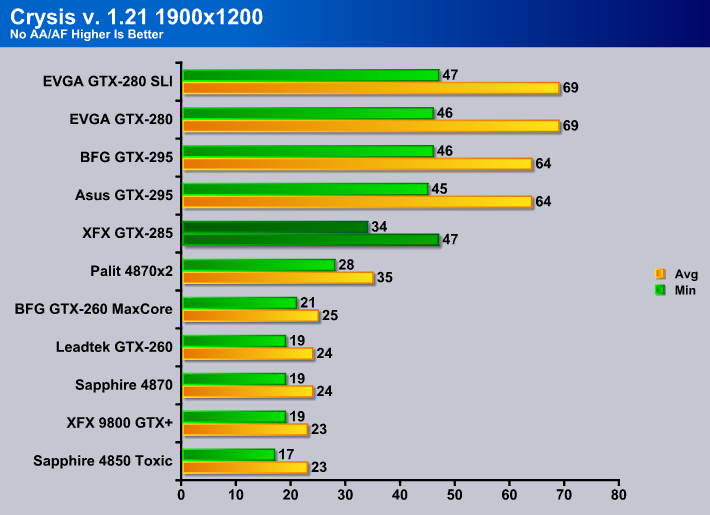

Here’s where things start to get weird. You won’t see it in this resolution and it’s why we mentioned that some tests were run more than three times. The XFX GTX-285 XXX stayed on top the GTX-280 in this test.

Then the GTX-280 popped over the GTX-285 at 1680×1050, with the GTX-285 XXX staying so consistently on top in all the tests previous to Crysis, we’d have to say driver optimization or anomaly.

Then, again here we see the GTX-280 way up there in the charts. We can’t say why we got the anomaly, but we plan on retesting the GTX-280 in Crysis again to see what we get. That would involve another clean Vista load, and we can’t delay the review for a single anomaly in one game, but if we get updated information we’ll update you.

The weirdness abates here and the XFX GTX-285 XXX comes back to the top of the stack of Single GPU cards, so we might be seeing a hint of a driver issue with no AA in Crysis. This early in a card’s driver evolution, it’s hard to tell.

Remaining above 30 FPS isn’t something most GPUs do at 1680×1050 in Crysis with the AA/AF on. The XFX GTX-285 did a good job of remaining on top of the stack.

At the highest resolution with the eye candy cranked, the XFX remained in the playable realm, and we were somewhat astonished. Finally, a single core GPU that keeps frame rates up in this GPU-killing behemoth.

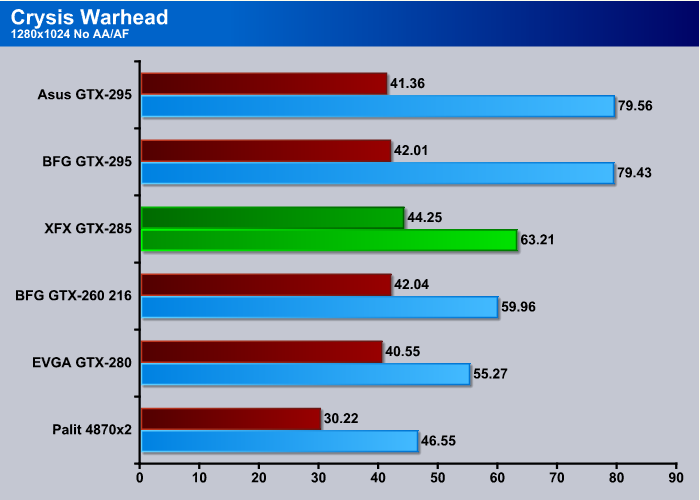

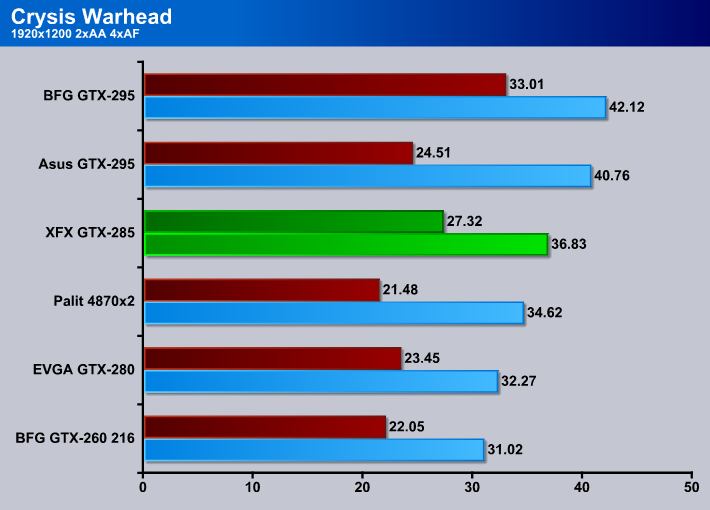

CRYSIS WARHEAD

Crysis Warhead is the follow-up to Crysis, featuring an updated CryENGINE™ 2 with better optimization. It was one of the most anticipated titles of 2008.

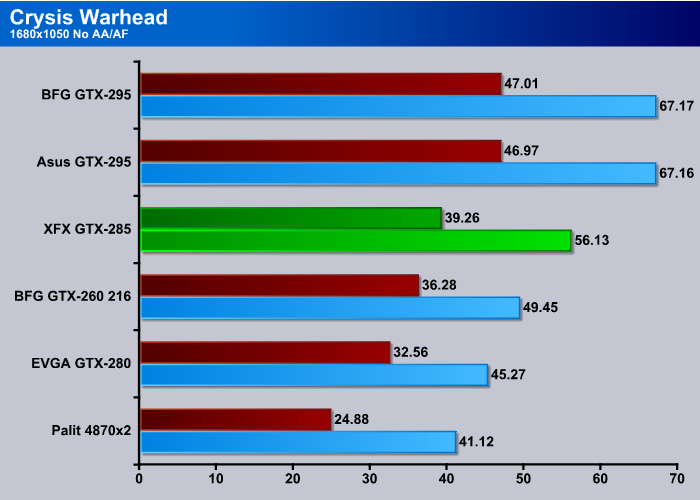

By this point we were a little tired of the gold and green standard charts, so we went for a little something different and changed up colors. When it comes to charts it doesn’t take much change to thrill us, but when you devote a whole day to charting you get a little punchy, so it’s a nice change for us. Coming into Warhead at the lowest resolution, you can already tell that Crysis Warhead is a good measure of a GPU’s raw power. The XFX GTX-285 XXX, despite the minor problem we saw in Crysis, comes in first among the single core GPUs.

No change here at 1680×1050. With the XFX GTX-285 XXX being the fastest single card overall throughout testing, we’ve come to expect it at the top of the single core stack.

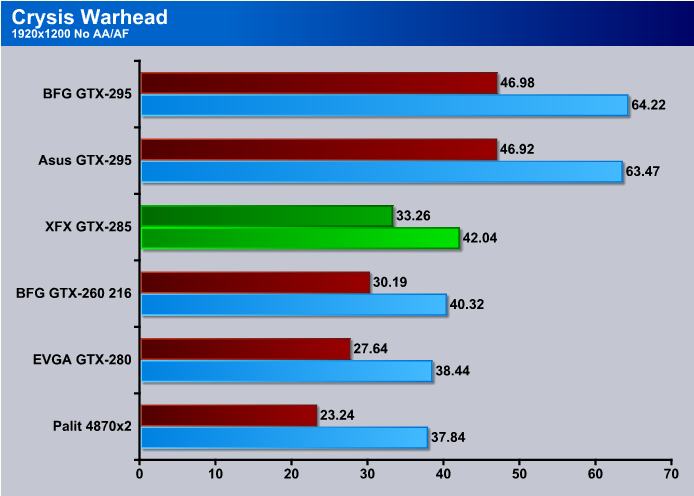

With that minor exception in Crysis, the GTX-285 stays on top of the single GPU (single core) stack. When it comes to raw power, we expect to see some dipping in FPS in Warhead with the eye candy turned on. The improved CryEngine 2 we’ve seen touted hasn’t been showing its optimization in benching and often performs below the original Crysis. That doesn’t mean the game engine is worse. It could mean the graphics are more intensive.

Still sitting on the throne of single core GPUs, the XFX GTX-285 XXX is looking more and more like the performance single core GPU of choice for demanding enthusiasts.

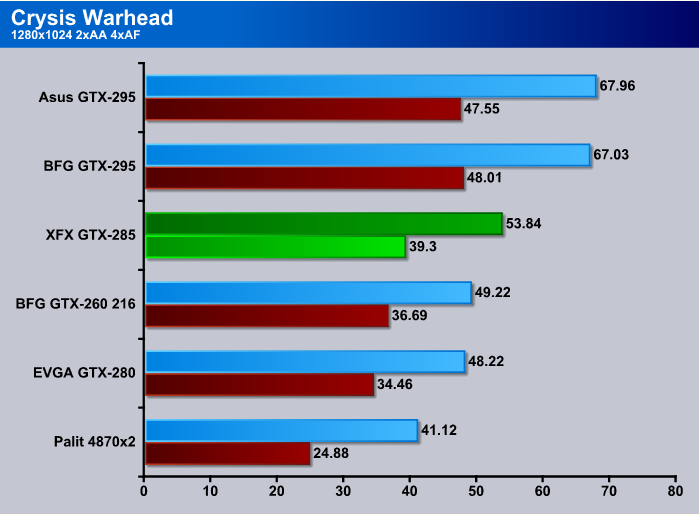

No changes at 1680×1050 with the eye candy cranked up. We’re still above 30 FPS for rock solid game play. You can see that three major GPUs are below that level already, and we have no doubt that more below 30 FPS action is coming.

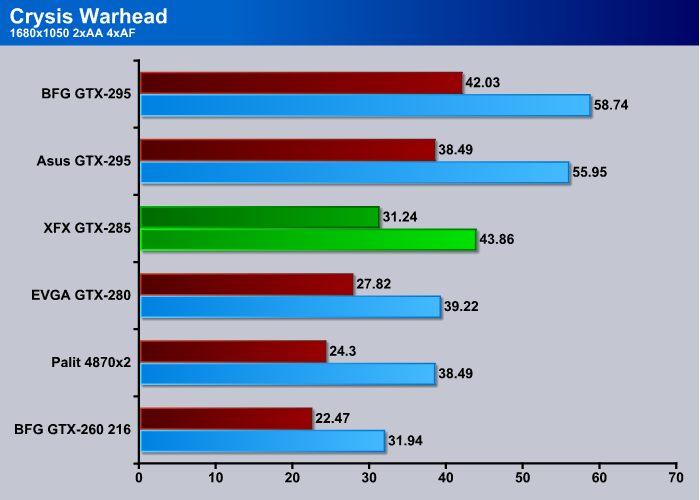

There you have it, sub 30 FPS, but it took Crysis Warhead and DirectX 10 to drop it below 30 FPS, and even then we didn’t see any noticeable dips in frame rates the human eye could detect. We like the eye candy that DirectX 10 can toss out there, but we really think Microsoft and the DirectX 10 team dropped the ball on making it work well. The combination of DirectX 10 and the CryEngine 2 proves too much for most single core GPUs. You would think that a company headed by the second richest man in the world could afford to optimize the DirectX 10 standard better. Or if it came to it, tear it down and redo it. I know we wouldn’t put our complete stamp of approval on DirectX 10. We would encourage updates to it that provide better performance, but Microsoft has trouble seeing over that stack of cash in the front office.

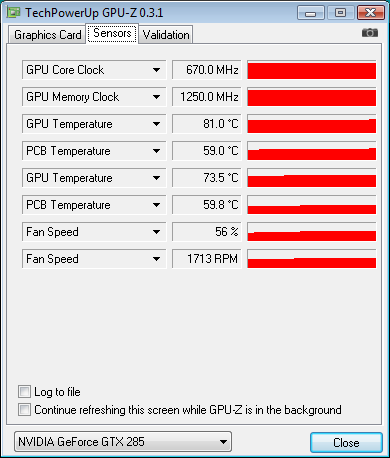

TEMPERATURES

To get our temperature reading, we ran 3DMark Vantage looping for 30 minutes to get the load temperature. To get the idle temp we let the machine idle at the desktop for 30 minutes with no background tasks that would drive the temperature up. Please note that this is on an open test station, so your chassis and cooling will affect the temps you’re seeing.

GPU Temperatures

| Idle | Load |
| --- | --- |
| 48° C | 81° C |

A small table just doesn’t seem to cut it so we are also including the GPU-Z sensor reading which matched a couple of other utilities we use.

Idling at the desktop for 30 minutes with no background tasks running that would increase temperatures yielded 48° C, and 30 minutes of looping 3DMark Vantage yielded 81° C. The factory overclocked nature of the XFX GTX-285 XXX edition will tend to run it a little warmer than a reference card. As you can see though, the drivers ramped up the fan to 56%, and we saw that percentage going up and down as the card heated up and cooled off, so variable fan speed should be fine for most uses.

POWER CONSUMPTION

To get our power consumption numbers we plugged in our Kill A Watt power measurement device and took the idle reading at the desktop during our temperature readings. We left it at the desktop for about 15 minutes and took the idle reading. Then, during the 30 minute loop of 3DMark Vantage, we watched for the peak power consumption and recorded the highest usage.

GPU Power Consumption

| GPU | Idle | Load |
| --- | --- | --- |
| XFX GTX-285 XXX | 215 Watts | 322 Watts |
| BFG GTX-295 | 238 Watts | 450 Watts |
| Asus GTX-295 | 240 Watts | 451 Watts |
| EVGA GTX-280 | 217 Watts | 345 Watts |
| EVGA GTX-280 SLI | 239 Watts | 515 Watts |
| Sapphire Toxic HD 4850 | 183 Watts | 275 Watts |
| Sapphire HD 4870 | 207 Watts | 298 Watts |
| Palit HD 4870×2 | 267 Watts | 447 Watts |

Total System Power Consumption

Going by the table, the XFX GTX-285 consumed about 2 Watts less at idle and 23 Watts less at load than the GTX-280. We saw spikes hit 350 Watts momentarily, but most of the time at load we were at 322 Watts. Keep in mind this is total power consumption on an ever changing setup, so mileage will vary.
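For anyone who wants to check the comparison against the table themselves, here is a minimal sketch that subtracts the readings. The numbers are copied straight from the table; the `power` dictionary and the `savings` helper are our own illustrative names, not part of any tool we used.

```python
# Total system power draw in Watts, copied from the table above.
power = {
    "XFX GTX-285 XXX": {"idle": 215, "load": 322},
    "EVGA GTX-280": {"idle": 217, "load": 345},
}


def savings(card, baseline, readings=power):
    """Return the Watts saved by `card` relative to `baseline` at idle and load."""
    return {
        state: readings[baseline][state] - readings[card][state]
        for state in ("idle", "load")
    }


delta = savings("XFX GTX-285 XXX", "EVGA GTX-280")
print(delta)  # {'idle': 2, 'load': 23}
```

Since these are whole-system readings on an open test bench, treat the differences as rough indicators rather than card-only figures.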

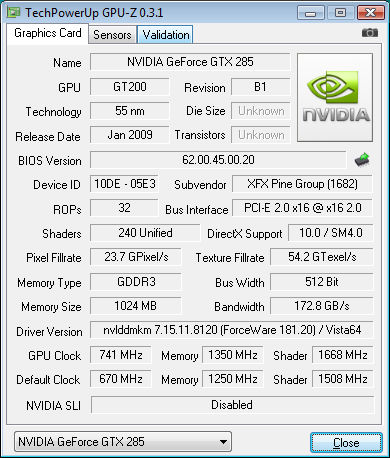

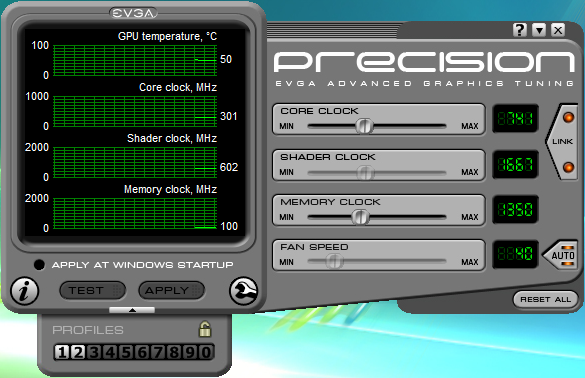

OVERCLOCKING

We expect the XFX GTX-285 XXX to OC a little better than the previous generation of GTX-280s. Its 55nm design makes it a better OCing candidate.

We managed to OC the XFX GTX-285 XXX from its factory OCed state of 670MHz Core to 741MHz. Was that the maximum stable OC we achieved? Not quite. There’s a little more left to get if you want to go to the raggedy edge. You’re better off finding the max stable OC and backing down a little bit, because running on the raggedy edge is when a lot of equipment damage happens. We managed a pretty decent memory OC, from 1250 to 1350. Again, that’s not quite the maximum. If you want to kick the fans up to 100% and go for some suicide runs you can get a little more out of it.

We verified our OC using ATITool to scan for artifacts for 30 minutes and checked it against EVGA Precision. We wanted to include this shot so you can see the Core downclocking itself to 301 MHz, the shader to 602 MHz, and the memory to 100 MHz. The extraordinary power savings mode on this beauty works like a charm. All the speed and power of the previous generation 65nm GTX-280, better overclockability, and lower power consumption. We’re looking at a winner here.
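To put those clocks in perspective, here is a quick sketch of the percentage gain over the factory settings. The clock values are the ones quoted above; `oc_gain_percent` is just an illustrative helper name of ours.

```python
def oc_gain_percent(stock_mhz, overclocked_mhz):
    """Return the overclock gain as a percentage of the starting clock."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100.0


core_gain = oc_gain_percent(670, 741)      # factory OC core -> our core OC
memory_gain = oc_gain_percent(1250, 1350)  # factory memory -> our memory OC

print(f"Core: {core_gain:.1f}%  Memory: {memory_gain:.1f}%")
# Core: 10.6%  Memory: 8.0%
```

Keep in mind the 670MHz starting point is already a factory overclock, so the gain over a reference clocked card would be larger than the core figure shown here.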

CONCLUSION

The XFX GTX-285 XXX edition performed exactly as we expected. The 55nm shrink on the core gave us better overclocking, with less power consumption and 10-15% better performance. It’s a really nice looking card, and for those of you with short term memory problems, here’s a drool shot of the attractive GPU.

The XFX GTX-285 XXX gives end users the graphics goodness they crave, excellent FPS in the most demanding games, and power savings to boot. Its increased performance over the previous generation is a welcome boost, and it is to date the fastest single core GPU we’ve ever seen.

We are trying out a new addition to our scoring system to provide additional feedback beyond a flat score. Please note that the final score isn’t an aggregate average of the new rating system.

- Performance 10

- Value 9

- Quality 10

- Warranty 10

- Features 10

- Innovation 10

Pros:

+ Runs cool enough

+ Able to run Triple SLI

+ Out-performs GeForce GTX-280

+ Good bundle (including FarCry 2)

+ Good overclocking

+ Double Lifetime Modder/Overclocker Friendly Warranty

Cons:

– May be too large for some cases/systems

– Addiction to high quality Graphics with blazing frame rates

With the XFX GTX-285 XXX’s good power consumption, 10-15% performance increase over the previous generation, great overclocking ability, Double Lifetime Warranty that’s Modder and overclocker friendly, fantastic frame rates, and mind blowing graphics goodness, the XFX GTX-285 receives a 9.5 out of 10 and the Bjorn3D.com Golden Bear Award.
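As noted with the new rating system, the final score isn’t an aggregate average of the category ratings. A minimal sketch of the plain average, using the six ratings listed above, shows the difference; the `ratings` dictionary is simply our own way of laying the numbers out.

```python
from statistics import mean

# Category ratings as listed in the scoring breakdown above.
ratings = {
    "Performance": 10,
    "Value": 9,
    "Quality": 10,
    "Warranty": 10,
    "Features": 10,
    "Innovation": 10,
}

final_score = 9.5  # the score awarded in the conclusion

average = mean(list(ratings.values()))
print(f"Plain average: {average:.2f}, final score: {final_score}")
# Plain average: 9.83, final score: 9.5
```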

Bjorn3D.com Bjorn3d.com – Satisfying Your Daily Tech Cravings Since 1996
